Importing CSV files

The read_csv method of the pandas library can be used to read a file with comma separated values (CSV) and load it into memory as a pandas DataFrame. In this recipe, we import a CSV file and address some common issues: creating column names that make sense to us, parsing dates, and dropping rows with critical missing data.

Raw data is often stored as CSV files. These files have a carriage return at the end of each line of data to demarcate a row, and a comma between each data value to delineate columns. Something other than a comma can be used as the delimiter, such as a tab. Quotation marks may be placed around values, which can be helpful when the delimiter occurs naturally within certain values, which sometimes happens with commas.

All data in a CSV file are characters, regardless of the logical data type. This is why it is easy to view a CSV file, presuming it is not too large, in a text editor. The pandas read_csv method will make an educated guess about the data type of each column, but you will need to help it along to ensure that these guesses are on the mark.

Getting ready

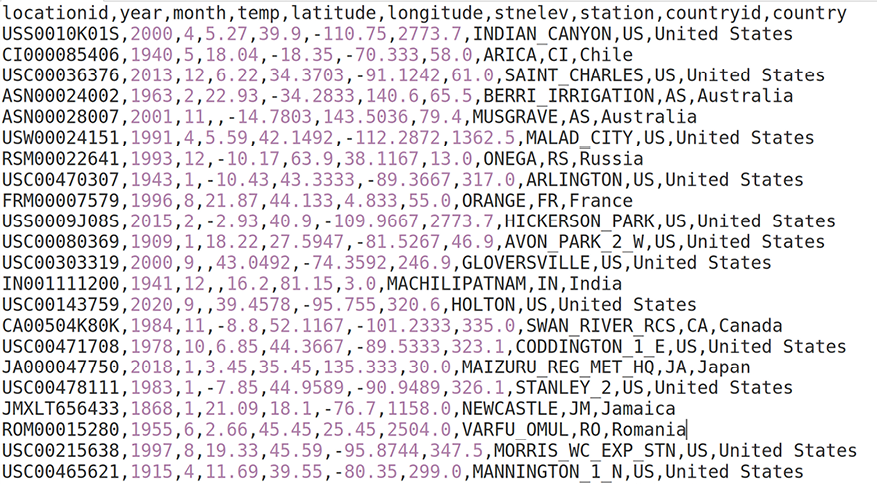

Create a folder for this chapter, and then create a new Python script or Jupyter Notebook file in that folder. Create a data subfolder, and then place the landtempssample.csv file in that subfolder. Alternatively, you could retrieve all of the files from the GitHub repository, including the data files. Here is a screenshot of the beginning of the CSV file:

Figure 1.1: Land Temperatures Data

Data note

This dataset, taken from the Global Historical Climatology Network integrated database, is made available for public use by the United States National Oceanic and Atmospheric Administration at https://www.ncei.noaa.gov/products/land-based-station/global-historical-climatology-network-monthly. I used the data from version 4. The data in this recipe uses a 100,000-row sample of the full dataset, which is also available in the repository.

How to do it…

We will import a CSV file into pandas, taking advantage of some very useful read_csv options:

- Import the

pandaslibrary, and set up the environment to make viewing the output easier:import pandas as pd pd.options.display.float_format = '{:,.2f}'.format pd.set_option('display.width', 85) pd.set_option('display.max_columns', 8) - Read the data file, set new names for the headings, and parse the date column.

Pass an argument of 1 to the skiprows parameter to skip the first row, pass a list of columns to parse_dates to create a pandas datetime column from those columns, and set low_memory to False. This will cause pandas to load all of the data into memory at once, rather than in chunks. We do this so that pandas can identify the data type of each column automatically. In the There’s more… section, we see how to set the data type for each column manually:

landtemps = pd.read_csv('data/landtempssample.csv',

... names=['stationid','year','month','avgtemp','latitude',

... 'longitude','elevation','station','countryid','country'],

... skiprows=1,

... parse_dates=[['month','year']],

... low_memory=False)

type(landtemps)

<class 'pandas.core.frame.DataFrame'>

Note

We have to use skiprows because we are passing a list of column names to read_csv. If we use the column names in the CSV file, we do not need to specify values for either names or skiprows.

- Get a quick glimpse of the data.

View the first few rows. Show the data type for all columns, as well as the number of rows and columns:

landtemps.head(7)

month_year stationid ... countryid country

0 2000-04-01 USS0010K01S ... US United States

1 1940-05-01 CI000085406 ... CI Chile

2 2013-12-01 USC00036376 ... US United States

3 1963-02-01 ASN00024002 ... AS Australia

4 2001-11-01 ASN00028007 ... AS Australia

5 1991-04-01 USW00024151 ... US United States

6 1993-12-01 RSM00022641 ... RS Russia

[7 rows x 9 columns]

landtemps.dtypes

month_year datetime64[ns]

stationed object

avgtemp float64

latitude float64

longitude float64

elevation float64

station object

countryid object

country object

dtype: object

landtemps.shape

(100000, 9)

- Give the date column a more appropriate name and view the summary statistics for average monthly temperature:

landtemps.rename(columns={'month_year':'measuredate'}, inplace=True) landtemps.dtypesmeasuredate datetime64[ns] stationid object avgtemp float64 latitude float64 longitude float64 elevation float64 station object countryid object country object dtype: objectlandtemps.avgtemp.describe()count 85,554.00 mean 10.92 std 11.52 min -70.70 25% 3.46 50% 12.22 75% 19.57 max 39.95 Name: avgtemp, dtype: float64 - Look for missing values for each column.

Use isnull, which returns True for each value that is missing for each column, and False when not missing. Chain this with sum to count the missing values for each column. (When working with Boolean values, sum treats True as 1 and False as 0. I will discuss method chaining in the There’s more... section of this recipe):

landtemps.isnull().sum()

measuredate 0

stationed 0

avgtemp 14446

latitude 0

longitude 0

elevation 0

station 0

countryid 0

country 5

dtype: int64

- Remove rows with missing data for

avgtemp.

Use the subset parameter to tell dropna to drop rows when avgtemp is missing. Set inplace to True. Leaving inplace at its default value of False would display the DataFrame, but the changes we have made would not be retained. Use the shape attribute of the DataFrame to get the number of rows and columns:

landtemps.dropna(subset=['avgtemp'], inplace=True)

landtemps.shape

(85554, 9)

That’s it! Importing CSV files into pandas is as simple as that.

How it works...

Almost all of the recipes in this book use the pandas library. We refer to it as pd to make it easier to reference later. This is customary. We also use float_format to display float values in a readable way and set_option to make the Terminal output wide enough to accommodate the number of variables.

Much of the work is done by the first line in Step 2. We use read_csv to load a pandas DataFrame in memory and call it landtemps. In addition to passing a filename, we set the names parameter to a list of our preferred column headings. We also tell read_csv to skip the first row, by setting skiprows to 1, since the original column headings are in the first row of the CSV file. If we do not tell it to skip the first row, read_csv will treat the header row in the file as actual data.

read_csv also solves a date conversion issue for us. We use the parse_dates parameter to ask it to convert the month and year columns to a date value.

Step 3 runs through a few standard data checks. We use head(7) to print out all columns for the first seven rows. We use the dtypes attribute of the DataFrame to show the data type of all columns. Each column has the expected data type. In pandas, character data has the object data type, a data type that allows for mixed values. shape returns a tuple, whose first element is the number of rows in the DataFrame (100,000 in this case) and whose second element is the number of columns (9).

When we used read_csv to parse the month and year columns, it gave the resulting column the name month_year. We used the rename method in Step 4 to give that column a more appropriate name. We need to specify inplace=True to replace the old column name with the new column name in memory. The describe method provides summary statistics on the avgtemp column.

Notice that the count for avgtemp indicates that there are 85,554 rows that have valid values for avgtemp. This is out of 100,000 rows for the whole DataFrame, as provided by the shape attribute. The listing of missing values for each column in Step 5 (landtemps.isnull().sum()) confirms this: 100,000 – 85,554 = 14,446.

Step 6 drops all rows where avgtemp is NaN. (The NaN value, not a number, is the pandas representation of missing values.) subset is used to indicate which column to check for missing values. The shape attribute for landtemps now indicates that there are 85,554 rows, which is what we would expect, given the previous count from describe.

There’s more...

If the file you are reading uses a delimiter other than a comma, such as a tab, this can be specified in the sep parameter of read_csv. When creating the pandas DataFrame, an index was also created. The numbers to the far left of the output when head was run are index values. Any number of rows can be specified for head. The default value is 5.

Instead of setting low_memory to False, to get pandas to make good guesses regarding data types, we could have set data types manually:

landtemps = pd.read_csv('data/landtempssample.csv',

names=['stationid','year','month','avgtemp','latitude',

'longitude','elevation','station','countryid','country'],

skiprows=1,

parse_dates=[['month','year']],

dtype={'stationid':'object', 'avgtemp':'float64',

'latitude':'float64','longitude':'float64',

'elevation':'float64','station':'object',

'countryid':'object','country':'object'},

)

landtemps.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 100000 entries, 0 to 99999

Data columns (total 9 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 month_year 100000 non-null datetime64[ns]

1 stationid 100000 non-null object

2 avgtemp 85554 non-null float64

3 latitude 100000 non-null float64

4 longitude 100000 non-null float64

5 elevation 100000 non-null float64

6 station 100000 non-null object

7 countryid 100000 non-null object

8 country 99995 non-null object

dtypes: datetime64[ns](1), float64(4), object(4)

memory usage: 6.9+ MB

The landtemps.isnull().sum() statement is an example of chaining methods. First, isnull returns a DataFrame of True and False values, resulting from testing whether each column value is null. The sum function takes that DataFrame and sums the True values for each column, interpreting the True values as 1 and the False values as 0. We would have obtained the same result if we had used the following two steps:

checknull = landtemps.isnull()

checknull.sum()

There is no hard and fast rule for when to chain methods and when not to do so. I find chaining helpful when the overall operation feels like a single step, even if it’s two or more steps mechanically. Chaining also has the side benefit of not creating extra objects that I might not need.

The dataset used in this recipe is just a sample from the full land temperatures database, with almost 17 million records. You can run the larger file if your machine can handle it, with the following code:

landtemps = pd.read_csv('data/landtemps.zip',

... compression='zip', names=['stationid','year',

... 'month','avgtemp','latitude','longitude',

... 'elevation','station','countryid','country'],

... skiprows=1,

... parse_dates=[['month','year']],

... low_memory=False)

read_csv can read a compressed ZIP file. We get it to do this by passing the name of the ZIP file and the type of compression.

See also

Subsequent recipes in this chapter, and in other chapters, set indexes to improve navigation over rows and merging.

A significant amount of reshaping of the Global Historical Climatology Network raw data was done before using it in this recipe. We demonstrate this in Chapter 11, Tidying and Reshaping Data.