Parallel computation using Julia

Modern systems ship with multi-core CPUs, and several such systems are sometimes combined into a cluster that can perform a task which a single system could not complete alone, or could complete only in an undesirable amount of time. Julia's parallel processing environment is based on message passing: a program can run as multiple processes, each in its own separate memory domain.

Julia implements message passing differently from other popular environments such as MPI. Communication in Julia is generally one-sided: the programmer explicitly manages only one of the processes in a two-process operation.

Julia's parallel programming paradigm is built on the following:

- Remote references

- Remote calls

A remote call is a request by one process to run a function with specific arguments on another process (or possibly on the same one). A remote reference is an object that any process can use to refer to an object stored on a particular process; it is a construct found in most distributed object systems.

A remote call returns a remote reference to its result, and it returns immediately: the process that made the call proceeds to its next operation while the remote call runs elsewhere. Calling wait() on the remote reference blocks until the remote call has finished. The full value of the result can be obtained with fetch(), and put!() stores a value in a remote reference.
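The calls described above can be sketched as follows. This is a minimal illustration, assuming Julia 1.x, where these functions are provided by the `Distributed` standard library; the two local workers and the variable names (`w`, `r`, `ch`) are illustrative choices, not part of the original text.

```julia
using Distributed

# Assumption: start two local workers if the session has none yet.
nprocs() == 1 && addprocs(2)

w = first(workers())          # id of one worker process

# remotecall returns immediately with a remote reference (a Future).
r = remotecall(+, w, 1, 2)

wait(r)                       # block until the remote call has finished
result = fetch(r)             # bring the computed value back to this process

# A RemoteChannel is a remote reference that values can be stored in.
ch = RemoteChannel(() -> Channel{Int}(1))
put!(ch, 42)                  # store a value in the remote reference
stored = take!(ch)            # remove it again and return it
```

Here `fetch(r)` would also wait on its own, so the explicit `wait(r)` is shown only to make the separate steps visible.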

By default, Julia starts with a single process. To start Julia with multiple worker processes, use the following:

    julia -p n

where n is the number of worker processes. Alternatively, extra worker processes can be created from a running session with addprocs(n). It is advisable to set n equal to the number of CPU cores in the system.
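Adding workers from a running session might look like the sketch below. It assumes Julia 1.x with the `Distributed` standard library and a local machine; the choice of two workers is arbitrary, and `Sys.CPU_THREADS` reports logical CPU threads, which may differ from the number of physical cores.

```julia
using Distributed

before = nprocs()         # total number of processes, including this one
new_ids = addprocs(2)     # start two local workers; returns their process ids
after = nprocs()

println("logical CPU threads: ", Sys.CPU_THREADS)
println("worker ids: ", workers())
```

The ids returned by `addprocs` can be passed to functions such as `remotecall` to choose where work runs.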

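pmap and @parallel are the two most frequently used and most useful constructs; pmap is a function, while @parallel is a macro. In Julia 1.0 and later, @parallel is named @distributed and is provided by the Distributed standard library.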

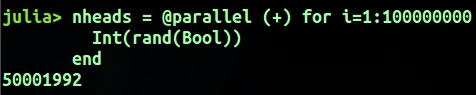

Julia provides a parallel for loop, which distributes the iterations of a loop across multiple processes.

A parallel for loop works by assigning iterations to multiple processes and then combining their results with a reduction operator such as (+). It is somewhat similar to the map-reduce concept: the iterations run independently on different processes, and the results obtained by these processes are combined at the end. The result of one loop can also serve as the input to another loop. The value of the whole parallel loop expression is the final reduced result.
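A reducing parallel loop of this kind can be sketched as below. The example assumes Julia 1.x, where the macro is spelled `@distributed` (the `@parallel` of earlier versions) and comes from the `Distributed` standard library; the coin-toss workload, the value of `n`, and the two local workers are illustrative assumptions.

```julia
using Distributed

# Assumption: start two local workers if the session has none yet.
nprocs() == 1 && addprocs(2)

n = 1_000_000

# Each worker evaluates a share of the range 1:n. The value of the last
# expression in the body is the per-iteration result, and the partial
# results are combined with the reduction operator (+).
nheads = @distributed (+) for i = 1:n
    Int(rand(Bool))           # 1 for heads, 0 for tails
end

println("fraction of heads: ", nheads / n)
```

Because a reducer is given, the expression blocks until every worker has finished and then yields the combined count.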

It is very different from a normal iterative loop because the iterations do not take place in a specified order. Because the iterations run on different processes, any writes to variables or arrays are not globally visible. The variables used inside the loop are copied and broadcast to each process of the parallel for loop.

For example:

    arr = zeros(500000)
    @parallel for i=1:500000
        arr[i] = i
    end

This will not give the desired result, because each process gets its own separate copy of arr. The vector will not be filled in with i as expected. Such parallel for loops must be avoided.
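The pitfall can be observed directly, and one possible way around it is an array that all local processes share. The sketch below assumes Julia 1.x (`@distributed` in place of `@parallel`) and uses `SharedArray` from the `SharedArrays` standard library, which is not mentioned in the text above and is brought in here only for illustration; it also assumes all workers run on the same machine. The array size and the names `arr` and `shared` are illustrative.

```julia
using Distributed

# Assumption: start two local workers if the session has none yet.
nprocs() == 1 && addprocs(2)
@everywhere using SharedArrays

n = 1000

# Ordinary array: each worker writes into its own private copy.
arr = zeros(n)
@sync @distributed for i = 1:n
    arr[i] = i
end
untouched = all(arr .== 0)    # the copy on the calling process is unchanged

# Shared array: every local process maps the same memory.
shared = SharedArray{Float64}(n)
@sync @distributed for i = 1:n
    shared[i] = i
end
filled = collect(shared) == collect(1.0:n)
```

Without a reducer the loop runs asynchronously, so `@sync` is used here to wait for all iterations before the results are inspected.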

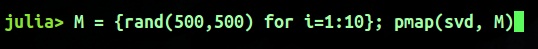

pmap is a parallel map: it applies a function to each element of a collection, distributing the calls across the worker processes. A typical use is a problem in which we have a number of large random matrices and are required to obtain their singular values in parallel.
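A minimal sketch of the singular-value computation, assuming Julia 1.x, where `pmap` comes from the `Distributed` standard library and `svdvals` from `LinearAlgebra`. The matrix size (200 by 200), the number of matrices (8), and the names `M` and `svals` are illustrative choices kept small so the example runs quickly.

```julia
using Distributed

# Assumption: start two local workers if the session has none yet.
nprocs() == 1 && addprocs(2)

# svdvals must be available on every process that will run it.
@everywhere using LinearAlgebra

# A collection of random matrices.
M = [rand(200, 200) for _ in 1:8]

# Each call svdvals(M[k]) is sent to a free worker; the results come
# back in the same order as the input collection.
svals = pmap(svdvals, M)
```

Each element of `svals` is the vector of singular values of the corresponding matrix, in descending order.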

pmap() is designed for a different kind of workload than @parallel. It is well suited to cases where each function call does a large amount of work, whereas @parallel is suited to situations that involve numerous small iterations. Both pmap() and @parallel for use worker processes for the parallel computation. However, in @parallel for, the final reduction is performed on the process from which the call originated.