Administering clusters

It's pivotal at this time to know more about the HBase administrative process, as it stores petabyte of data in distributed locations and requires the system to work smoothly agnostic to the location of the data in a multithreaded, easy-to-manage environment in addition to the hardware, OS, JDK and other hardware and software components.

HbBase and administrative GUI provide the current state of the cluster and and plenty of command-line tools, which can really give us an in-depth knowledge about what is going on in the cluster.

Getting ready

We must have a HDFS/Hadoop setup in a cluster or fully distributed mode as the first step, which we did it in the earlier section. The second step is to have an HBase setup in a fully distributed mode.

Tip

It's assumed that we have a setup of password-less communication between the master and the region servers on the HBase side. If you are using the same nodes for Hadoop/Hdfs setup, we need to have the Hadoop user also to have a password-less setup from Namenode to Secondary NameNode, DataNodes, and so on.

We must have a full HBase cluster running on top of hadoop/HDFS cluster.

How to do it…

HBase provides multiple ways to do administration work on the fully distributed clustered environment:

- WebUI-based environment

- Command line-based environment

- HBase Admin UI

The Master run on 60010 ports as default, the web interface is looked up on this port only. This needs to be started on the HMaster node.

The master UI gives a dashboard of what's going on in the HBase cluster.

The UI contains the following details:

- HBase home

- Table details

- Local logs

- Log levels

- Debug dump

- Metric dump

- HBase configuration

We will go through it in the following sections.

- HBase Home: It contains the dashboard that gives a holistic picture of the HBase cluster.

- The region server

- The backup master

- Tables

- A task

- Software attributes

- Region Server: The image shows the region server and also provided a tab view of various metrics like (basic stats, Memory, Request, Storefiles, Compactions):

A Detailed discussion is out of scope at this point. For more details, see later sections. For our purpose, we will avoid to go for backup master:

Let's list all the user-created tables using Tables. It provides the details about User Tables (tables that are created by the users/actors), Catalog Tables (this contains HBase :meta and HBase :namespaces), which is seen in the following figure:

Clicking any table, as listed earlier, other important details are shown such as table attributes, table regions, and region-by-region server details. Actions as compaction and split can be taken using admin UI.

Task provides the details of talk, which is happening. We can see Monitored task, RPC Tasks, RPC Handler task, Active RPC Calls, Client Operations, and a JSON response:

The following Software Attributes page provides the details of the software used in the HBase cluster:

After clicking on zk_dump, it provides further details about the zookeeper Quorum stats as follows:

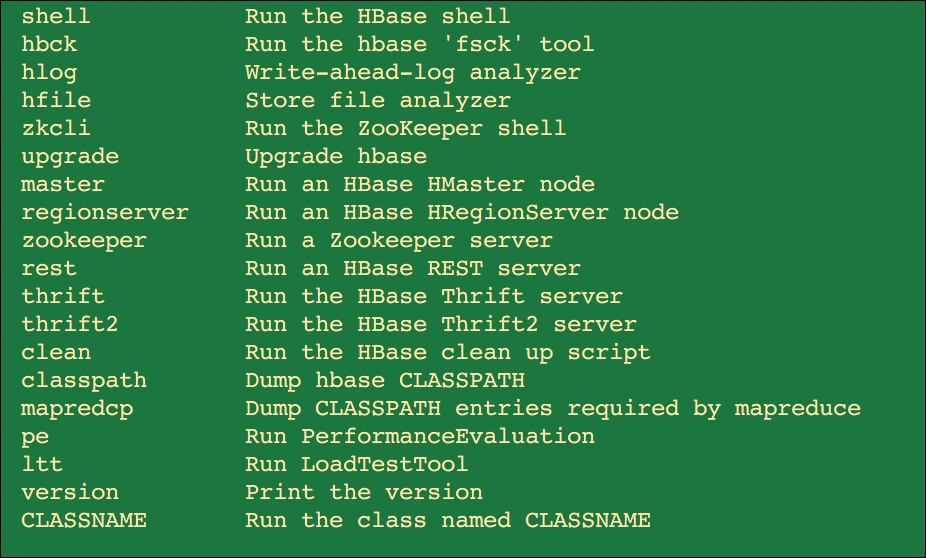

HBase provides various command-line tools for administrating, debugging, and doing an analysis on the HBase cluster.

The first tool is the HBase shell, and the details are as follows:

bin/HBase Usage: HBase [<options>] <command> [<args>]

Identify inconsistencies with hbck; this tool provides consistencies, checks for corruption of data, and it runs against the cluster.

bin/HBase hbck

This runs against the cluster and provides the details of inconsistencies between the regions and masters.

bin/HBase hbck –details

The -details provides the insight of splits which happens in all the tables.

bin/HBase hbck MyClickStream

The preceding line enables us to vView the HFile content in a text format.

bin/HBase org.apache.hadoop.HBase .io.hfile.HFile

Use FSFLogs for manual splitting and dumping:

HBase org.apache.hadoop.HBase .regionserver.wal.FSHLog --dump HBase org.apache.hadoop.HBase .regionserver.wal.FSHLog –split

Enable compressor tool:

HBase org.apache.hadoop.HBase .util.CompressionTest hdfs://host/path/to/HBase snappy

Enable compressor tool on the Column Family or while creating a tables:

HBase > disable 'MyClickStream' HBase > alter 'MyClickStream', {NAME => 'cf', COMPRESSION => 'GZ'} HBase > enable 'MyClickStream' HBase > create MyClickStream', {NAME =>'cf2', COMPRESSION => 'SNAPPY'}

Load Test too Usage below are some of the commands which can be used to do a quick load test on your compression performance:

bin/HBase org.apache.hadoop.HBase .util.LoadTestTool -h

usage: bin/HBase org.apache.hadoop.HBase .util.LoadTestTool <options>.

Options: includes –batchupdate

-compression: <arg> Compression type , arguments can be LZq,GZ,NONE and SNAPPY

We will limit ourselves with the above commands.

A good example will be as follows:

HBase org.apache.hadoop.HBase .util.LoadTestTool -write 2:20:20 -num_keys 500000 -read 60:30 -num_tables 1 -data_block_encoding NONE -tn load_test_tool_NONE -write (here we are passing 2 as ->avg_cols_per_key) 20 is the ->avg_data_size>: 20 is number of parallel threads to be used. -num_key has an integer arguments as 500000, this is the number of keys to read and write.

Now let's look at a read:

-read 60 is the verify percent

30 is the number of threads

-num_tables a positve interger is passed which is the number of tables to be loaded in parallel -data_block_encoding there are various encoding algorithms which can be passed as an argument, this allow the data block to be encoded based on the need. Some of them are [NONE,PREFIX,DIFF,FAST_DIFF,PREFIX_TREE].

-tn is a table name prefix which exports the content and data to HDFS in a sequence file using this:

HBase org.apache.hadoop.HBase .mapreduce.Export <tablename> <outputdir> [<versions> [<starttime> [<endtime>]]]

Note

You can configure HBase .client.scanner.caching in the job configuration; this is for all the scans.

Importing: This tool will load data that has been exported back into HBase :

This can be done by the following command:

HBase org.apache.hadoop.HBase .mapreduce.Import <tablename> <inputdir>

-tablename: Is the name of the table to be imported by the tool.

-inputdir: Is the input dir which will be used.

Utility to Replay WAL files into HBase :

HBase org.apache.hadoop.HBase .mapreduce.WALPlayer [options] <wal inputdir> <tables> [<tableMappings>]> HBase org.apache.hadoop.HBase .mapreduce.WALPlayer /backuplogdir MyClickStream newMyClickStream walinputdi: /backuplogdir tables: MyClickStream tableMappings→newMyClickStream newMyClickStream

HBase clean: is dangerous and should be avoided in production setup.

HBase clean

Options: as parameters

--cleanZk cleans HBase related data from zookeeper. --cleanHdfs cleans HBase related data from hdfs. --cleanAll cleans HBase related data from both zookeeper and hdfs.

HBase pe: This is a shortcut to run the performance evaluations tools

HBase ltt: This command is a shortcut provided to run the rg.apache.hadoop.HBase .util.LoadTestTool utility. It was introduced in 0.98 version.

View the details of the table as shown here:

Go to the log tab on the HBase Admin UI home page, and you will see the following details. Alternatively, you can log in to the directory using the Linux shell to tail the logs.

Directory: /logs/:

SecurityAuth.audit 0 bytes Aug 29, 2014 7:22:00 PM HBase -hadoop-master-rchoudhry-linux64.com.log 691391 bytes Sep 2, 2014 11:01:34 AM HBase -hadoop-master-rchoudhry-linux64.com.out 419 bytes Aug 29, 2014 7:31:21 PM HBase -hadoop-regionserver-rchoudhry-linux64.com.log 1048281 bytes Sep 2, 2014 11:01:23 AM HBase -hadoop-regionserver-rchoudhry-linux64com.out 419 bytes Aug 29, 2014 7:31:23 PM HBase -hadoop-zookeeper-rchoudhry-linux64.log 149832 bytes Aug 31, 2014 12:51:42 AM HBase -hadoop-zookeeper-rchoudhry-linux64.com.out 419 bytes Aug 29, 2014 7:31:19 PM HBase -hadoop-zookeeper-rchoudhry-linux64.com.out.1 1146 bytes Aug 29, 2014 7:26:29 PM

Get and set the log levels as required at runtime:

Log dump

Have a look at what is going in the cluster with Log dump:

Master status for HBase -hadoop-master-rchoudhry-linux64.com,60000,1409365881345 as of Tue Sep 02 11:17:33 PDT 2014 Version Info: =========================================================== HBase 0.98.5-hadoop2 Subversion file:///var/tmp/0.98.5RC0/HBase -0.98.5 -r Unknown Compiled by apurtell on Mon Aug 4 23:58:06 PDT 2014 Hadoop 2.2.0 Subversion https://svn.apache.org/repos/asf/hadoop/common -r 1529768 Compiled by hortonmu on 2013-10-07T06:28Z Tasks: =========================================================== Task: RpcServer.reader=1,port=60000 Status: WAITING:Waiting for a call Running for 315970s Task: RpcServer.reader=2,port=60000 Status: WAITING:Waiting for a call Running for 315969s

Metrics dump

Exposes the JMX details for the following components in a JSON format using Matrix dump:

- Start-up progress

- Balancer

- Assignment Manager

- Java Management extension details

- Java Runtime Implementation System Properties

Note

These are various system properties; the discussion of it is beyond the scope of this book.

How it works…

When the browser points to the http address http://your-HBase-master:60010/master-status, the web interface of HBase admin is loaded.

Internally, it connects to the Zookeeper and can collect the details from the zookeeper interface, where in zookeeper tracks the Region server as per the region server configuration in the region server file. These are the values set in the HBase -site.xml. The data for hadoo/hdfs, region servers, zookeeper quorum details are continuously looked by the RPC calls, which the master makes via zookeeper.

In the above HBase -site.xml, the user/ sets the Master, Backup master, various other software attributes, the refresh time, memory allocations, storefiles, compactions,request, zk dumps etc

Node or Cluster view: In this, the user chooses either monitoring Hmaster or Region server data view. The HMaster view contains data and graphics about the node status. The Region server view is the main and the most important one because it allows monitoring of all the region server aspects.

You can point the http address to http://your-HBase-master:60030/rs-status. It loads the admin UI for the region servers.

The matrix that is captured here is as follows:

- Region server Metrics (Base stats, Memory, request, Hlog, Storefiles, Queues) Tasks happening in Region servers (Monitored, RPC, RPC handler, active RPC .JSON, client operations)

- Block Cache provides different options for on-heap and off-heap: LurBlockCache and Bucket are off-heap.

Backup master is a design/architecture choice, which needs careful considerations before enabling it. HBase by design is a fault-tolerant distributed system with assumes hardware failure in the network topology.

However, HBase does provide various options to for it such as:

- Data center-level failure

- Accidental deletion of records/data

- For audit purpose

See also

Refer to the following chapter:

- Working with Large Distributed Systems.