Chapter 7. Cloud Computing

In this chapter, we will cover the following recipes:

- Creating virtual machine with KVM

- Managing virtual machines with virsh

- Setting up your own cloud with OpenStack

- Adding a cloud image to OpenStack

- Launching a virtual instance with OpenStack

- Installing Juju, a service orchestration framework

- Managing services with Juju

Introduction

Cloud computing has become one of the most important terms in the computing sphere. It has reduced the effort and cost required to set up and operate computing infrastructure. It has helped businesses start their operations quickly without spending time planning their IT infrastructure, and has enabled very small teams to scale their businesses with on-demand computing power.

The term cloud is commonly used to refer to a large network of servers connected to the Internet. These servers offer a wide range of services and are available to the general public on a pay-per-use basis. Most cloud resources are available in the form of Software as a Service (SaaS), Platform as a Service (PaaS), or Infrastructure as a Service (IaaS). SaaS is a software system hosted in the cloud. These systems are generally maintained by large organizations; well-known examples are Gmail and Google Docs. End users access these applications through their browsers. They can simply sign up for the service, pay the required fees, if any, and start using it without any local setup. All data is stored in the cloud and is accessible from any location.

PaaS provides a base platform to develop and run applications in the cloud. The service provider does the hard work of building and maintaining the infrastructure and provides easy-to-use APIs that enable developers to quickly develop and deploy an application. Heroku and the Google App Engine are well-known examples of PaaS services.

Similarly, IaaS provides access to computing infrastructure. This is the base layer of cloud computing and provides physical or virtual access to computing, storage, and network services. The service provider builds and maintains the actual infrastructure, including hardware assembly, virtualization, backups, and scaling. Examples include Amazon AWS and the Google Compute Engine. Heroku is a platform service built on top of the AWS infrastructure.

These cloud services are built on top of virtualization. Virtualization is a software system that enables us to break a large physical server into multiple small virtual servers that can be used independently. One can run multiple isolated operating systems and applications on a single large hardware server. Cloud computing is a set of tools that allows the general public to utilize these virtual resources at a small cost.

Ubuntu offers a wide range of virtualization and cloud computing tools. It supports hypervisors such as KVM, Xen, and QEMU; the free and open source cloud computing platform OpenStack; the service orchestration tool Juju; and the machine provisioning tool MAAS. In this chapter, we will take a brief look at virtualization with KVM. We will install and set up our own cloud with OpenStack and deploy our applications with Juju.

Creating virtual machine with KVM

Ubuntu server gives you various options for your virtualization needs. You can choose from KVM, Xen, QEMU, VirtualBox, and various other proprietary and open source tools. KVM, or Kernel-based Virtual Machine, is the default hypervisor on Ubuntu. In this recipe, we will set up a virtual machine with the help of KVM. Ubuntu, being a popular cloud distribution, provides prebuilt cloud images that can be used to start virtual machines in the cloud. We will use one of these prebuilt images to build our own local virtual machine.

Getting ready

As always, you will need access to the root account or an account with sudo privileges.

How to do it…

Follow these steps to install KVM and launch a virtual machine using a cloud image:

- To get started, install the required packages:

$ sudo apt-get install kvm cloud-utils \
    genisoimage bridge-utils

Tip

Before using KVM, you need to check whether your CPU supports hardware virtualization, which is required by KVM. Check CPU support with the following command:

$ kvm-ok

You should see output like this:

INFO: /dev/kvm exists
KVM acceleration can be used.

- Next, download the cloud images from the Ubuntu servers. I have selected the Ubuntu 14.04 Trusty image:

$ wget http://cloud-images.ubuntu.com/releases/trusty/release/ubuntu-14.04-server-cloudimg-amd64-disk1.img -O trusty.img.dist

This image is in a compressed format and needs to be converted into an uncompressed format. This is not strictly necessary but should save on-demand decompression when an image is used. Use the following command to convert the image:

$ qemu-img convert -O qcow2 trusty.img.dist trusty.img.orig

- Create a copy-on-write image to protect your original image from modifications:

$ qemu-img create -f qcow2 -b trusty.img.orig trusty.img

- Now that our image is ready, we need a cloud-config disk to initialize this image and set the necessary user details. Create a new file called user-data and add the following data to it:

$ sudo vi user-data
#cloud-config
password: password
chpasswd: { expire: False }
ssh_pwauth: True

This file will set a password for the default user, ubuntu, and enable password authentication in the SSH configuration.

- Create a disk with this configuration written on it:

$ cloud-localds my-seed.img user-data

- Next, create a network bridge to be used by virtual machines. Edit /etc/network/interfaces as follows:

auto eth0
iface eth0 inet manual

auto br0
iface br0 inet dhcp
    bridge_ports eth0

Note

On Ubuntu 16.04, you will need to edit files under the /etc/network/interfaces.d directory. Edit the file for eth0 or your default network interface, and create a new file for br0. All files are merged under /etc/network/interfaces.

- Restart the networking service for the changes to take effect. If you are on an SSH connection, your session will get disconnected:

$ sudo service networking restart

- Now that we have all the required data, let's start our image with KVM, as follows:

$ sudo kvm -netdev bridge,id=net0,br=br0 \
    -net user -m 256 -nographic \
    -hda trusty.img -hdb my-seed.img

This should start a virtual machine and route all input and output to your console. The first boot with cloud-init should take a while. Once the boot process completes, you will get a login prompt. Log in with the username ubuntu and the password specified in user-data.

- Once you get access to the shell, set a new password for the user ubuntu:

$ sudo passwd ubuntu

After that, uninstall the cloud-init tool to stop it running on the next boot:

$ sudo apt-get remove cloud-init

Your virtual machine is now ready to use. The next time you start the machine, you can skip the second disk with the cloud-init details and route the system console to VNC, as follows:

$ sudo kvm -netdev bridge,id=net0,br=br0 \
    -hda trusty.img \
    -m 256 -vnc 0.0.0.0:1 -daemonize

How it works…

Ubuntu provides various options to create and manage virtual machines. The previous recipe covers basic virtualization with KVM and prebuilt Ubuntu Cloud images. KVM is very similar to desktop virtualization tools such as VirtualBox and VMware. It works together with the QEMU emulator and uses the hardware virtualization features of the host CPU to boost the performance of virtual machines. Without hardware support, the machines have to run inside the QEMU emulator without acceleration.
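As a rough illustration of the hardware support mentioned here, the following sketch looks for the Intel VT-x (vmx) or AMD-V (svm) CPU flags in /proc/cpuinfo. The function name has_hw_virt and its optional file argument are illustrative and not part of any Ubuntu tool; kvm-ok remains the more thorough check, because a flag in /proc/cpuinfo does not guarantee that virtualization is enabled in the firmware.

```shell
#!/bin/sh
# has_hw_virt: succeed if the CPU flags advertise Intel VT-x (vmx) or AMD-V (svm).
# The optional argument (an assumption, added for testing) is a cpuinfo-style
# file; it defaults to /proc/cpuinfo.
has_hw_virt() {
    cpuinfo="${1:-/proc/cpuinfo}"
    grep -E -q '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' \
        "$cpuinfo" 2>/dev/null
}

if has_hw_virt; then
    echo "Hardware virtualization flags found; KVM acceleration is likely available."
else
    echo "No vmx/svm flag found; guests would run under plain emulation."
fi
```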

After installing KVM, we used an Ubuntu cloud image as our pre-installed boot disk. Cloud images are prebuilt operating system images that do not contain any user data or system configuration. These images need to be initialized before being used. Recent Ubuntu releases contain a program called cloud-init, which is used to initialize the image at first boot. The cloud-init program looks for the metadata service on the network and queries user-data once the service is found. In our case, we used a secondary disk to pass user data and initialize the cloud image.
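The user-data file can also be written without an interactive editor. The following is a minimal sketch, assuming a scratch directory created with mktemp and the same placeholder password as in the recipe; it checks for the #cloud-config header on the first line and only builds the seed disk when cloud-localds is installed.

```shell
#!/bin/sh
# Work in a scratch directory so nothing in the current directory is overwritten.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Same settings as in the recipe; "password" is a placeholder to change.
cat > user-data <<'EOF'
#cloud-config
password: password
chpasswd: { expire: False }
ssh_pwauth: True
EOF

# The file must begin with the #cloud-config header to be read as cloud-config.
if [ "$(head -n 1 user-data)" != "#cloud-config" ]; then
    echo "user-data is missing the #cloud-config header" >&2
    exit 1
fi

# Build the seed disk only when cloud-localds (from cloud-utils) is available.
if command -v cloud-localds >/dev/null 2>&1; then
    cloud-localds my-seed.img user-data
    echo "Seed disk written to $workdir/my-seed.img"
else
    echo "cloud-localds not found; user-data left in $workdir"
fi
```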

We downloaded the prebuilt image from the Ubuntu image server and converted it to an uncompressed format. Then, we created a new snapshot with the backing image set to the original prebuilt image. This should protect our original image from any modifications so that it can be used to create more copies. Whenever you need to restore a machine to its original state, just delete the newly created snapshot image and recreate it. Note that you will need to run the cloud-init process again after such a restore.
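The restore procedure described here can be sketched as a small shell function. The name reset_overlay and its argument order are illustrative; the qemu-img invocation is the same one used in the recipe. Newer QEMU releases may additionally require the backing format to be stated with -F qcow2.

```shell
#!/bin/sh
# reset_overlay BASE OVERLAY
# Discard the copy-on-write overlay and create a fresh one on top of BASE.
# The function name and argument order are illustrative.
reset_overlay() {
    base="$1"
    overlay="$2"
    # Refuse to delete anything if the backing image is missing.
    if [ ! -f "$base" ]; then
        echo "backing image $base not found" >&2
        return 1
    fi
    rm -f "$overlay"
    qemu-img create -f qcow2 -b "$base" "$overlay"
}

# Example (run with the virtual machine stopped):
#   reset_overlay trusty.img.orig trusty.img
```

After a reset, boot the machine with the seed disk attached again so that cloud-init can set the password.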

This recipe uses prebuilt images, but you can also install the entire operating system on virtual machines. You will need to download the required installation medium and attach a blank hard disk to the VM. For installation, make sure you set up a VNC connection so that you can follow the installation steps.

There's more…

Ubuntu also provides the virt-manager graphical interface to create and manage KVM virtual machines from a GUI. You can install it as follows:

$ sudo apt-get install virt-manager

Alternatively, you can also install Oracle VirtualBox on Ubuntu. Download the .deb file for your Ubuntu version and install it with dpkg -i, or install it from the package manager as follows:

- Add the Oracle repository to your installation sources. Make sure to substitute xenial with the correct Ubuntu version:

$ sudo vi /etc/apt/sources.list
deb http://download.virtualbox.org/virtualbox/debian xenial contrib

- Add the Oracle public keys:

$ wget -q https://www.virtualbox.org/download/oracle_vbox_2016.asc -O- | sudo apt-key add -

- Install VirtualBox:

$ sudo apt-get update && sudo apt-get install virtualbox-5.0

See also

- VirtualBox downloads: https://www.virtualbox.org/wiki/Linux_Downloads

- Ubuntu Cloud images on a local hypervisor: https://help.ubuntu.com/community/UEC/Images#line-105

- The Ubuntu community page for KVM: https://help.ubuntu.com/community/KVM

Managing virtual machines with virsh

In the previous recipe, we saw how to start and manage virtual machines with KVM. This recipe covers the use of Virsh and virt-install to create and manage virtual machines. The libvirt Linux library exposes various APIs to manage hypervisors and virtual machines. Virsh is a command-line tool that provides an interface to libvirt APIs.

To create a new machine, Virsh needs the machine definition in XML format. virt-install is a Python script to easily create a new virtual machine without manipulating bits of XML. It provides an easy-to-use interface to define a machine, create an XML definition for it and then load it in Virsh to start it.

In this recipe, we will create a new virtual machine with virt-install and see how it can be managed with various Virsh commands.

Getting ready

You will need access to the root account or an account with sudo privileges.

- Install the required packages, as follows:

$ sudo apt-get update
$ sudo apt-get install -y qemu-kvm libvirt-bin virtinst

- Install packages to create the cloud init disk:

$ sudo apt-get install genisoimage

- Add your user to the libvirtd group and update group membership for the current session:

$ sudo adduser ubuntu libvirtd
$ newgrp libvirtd

How to do it…

We need to create a new virtual machine. This can be done either with an XML definition of the machine or with a tool called virt-install. We will again use the prebuilt Ubuntu Cloud images and initialize them with a secondary disk:

- First, download the Ubuntu Cloud image and prepare it for use:

$ mkdir ubuntuvm && cd ubuntuvm
$ wget -O trusty.img.dist \
    http://cloud-images.ubuntu.com/releases/trusty/release/ubuntu-14.04-server-cloudimg-amd64-disk1.img
$ qemu-img convert -O qcow2 trusty.img.dist trusty.img.orig
$ qemu-img create -f qcow2 -b trusty.img.orig trusty.img

- Create the initialization disk to initialize your cloud image:

$ sudo vi user-data
#cloud-config
password: password
chpasswd: { expire: False }
ssh_pwauth: True

$ sudo vi meta-data
instance-id: ubuntu01
local-hostname: ubuntu

$ genisoimage -output cidata.iso -volid cidata -joliet \
    -rock user-data meta-data

- Now that we have all the necessary data, let's create a new machine, as follows:

$ virt-install --import --name ubuntu01 \
    --ram 256 --vcpus 1 --disk trusty.img \
    --disk cidata.iso,device=cdrom \
    --network bridge=virbr0 \
    --graphics vnc,listen=0.0.0.0 --noautoconsole -v

This should create a virtual machine and start it. A display should be opened on the local VNC port 5900. You can access the VNC through other systems available on the local network with a GUI.

Tip

You can set up local port forwarding and access VNC from your local system as follows:

$ ssh kvm_hostname_or_ip -L 5900:127.0.0.1:5900
$ vncviewer localhost:5900

- Once the cloud-init process completes, you can log in with the default user, ubuntu, and the password set in user-data.

- Now that the machine is created and running, we can use the virsh command to manage this machine. You may need to connect virsh and qemu before using them:

$ virsh connect qemu:///system

- Get a list of running machines with virsh list. The --all parameter will show all available machines, whether they are running or stopped:

$ virsh list --all
# or
$ virsh --connect qemu:///system list

- You can open a console to a running machine with virsh as follows. This should give you a login prompt inside the virtual machine:

$ virsh console ubuntu01

To close the console, use the Ctrl + ] key combination.

- Once you are done with the machine, you can shut it down with virsh shutdown. This will call a shutdown process inside the virtual machine:

$ virsh shutdown ubuntu01

You can also stop the machine without a proper shutdown, as follows:

$ virsh destroy ubuntu01

- To completely remove the machine, use virsh undefine. With this command, the machine will be deleted and cannot be used again:

$ virsh undefine ubuntu01

How it works…

Together, the virt-install and virsh commands give you an easy-to-use virtualization environment. Additionally, the system does not need to support hardware virtualization. When it is available, the virtual machines will use KVM and hardware acceleration; when KVM is not supported, QEMU will be used to emulate virtual hardware.

With virt-install, we have easily created a KVM virtual machine. This command abstracts the XML definition required by libvirt. With a list of various parameters, we can easily define all the components with their respective configurations. You can get a full list of virt-install parameters with the --help flag.

Tip

The virtinst package, which installs virt-install, also contains some more commands, such as virt-clone, virt-admin, and virt-xml. Use tab completion in your bash shell to get a list of all virt-* commands.

Once the machine is defined and running, it can be managed with virsh subcommands. Virsh provides tons of subcommands to manage virtual machines, or domains as they are called by libvirt. You can start machines, pause and resume them, or stop them entirely. You can even modify the machine configuration to add or remove devices as needed, or create a clone of an existing machine. To get a list of all machine (domain) management commands, use virsh help domain.

Once you have your first virtual machine, it becomes easier to create new machines using the XML definition from it. You can dump the XML definition with virsh dumpxml machine, edit it as required, and then create a new machine using XML configuration with virsh create configuration.xml.
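As a minimal sketch of that editing step, the following function rewrites the name in a dumped definition and removes the uuid line so that libvirt assigns a new one. The function name clone_domain_xml is hypothetical, and the sed expressions assume the usual one-element-per-line layout printed by virsh dumpxml; the disk path and MAC address still need to be adjusted by hand.

```shell
#!/bin/sh
# clone_domain_xml NEW_NAME < old.xml > new.xml
# Replace the <name> element and drop the <uuid> line from a domain
# definition read on standard input. The function name is hypothetical.
clone_domain_xml() {
    new_name="$1"
    sed -e "s|<name>[^<]*</name>|<name>${new_name}</name>|" \
        -e '/<uuid>/d'
}

# Typical use on a KVM host (not run here):
#   virsh dumpxml ubuntu01 | clone_domain_xml ubuntu02 > ubuntu02.xml
#   # point the <disk> source at a separate image and remove the <mac> line
#   virsh create ubuntu02.xml
```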

There are a lot more options available for the virsh and virt-install commands; check their respective manual pages for more details.

There's more…

In the previous example, we used cloud images to quickly start a virtual machine. You do not need to use cloud images; you can install the operating system yourself from the respective installation media.

Download the installation media and then use the following command to start the installation. Make sure you change the -c parameter to point to the downloaded ISO file, including its path:

$ sudo virt-install -n ubuntu -r 1024 \
--disk path=/var/lib/libvirt/images/ubuntu01.img,bus=virtio,size=4 \
-c ubuntu-16.04-server-i386.iso \
--network network=default,model=virtio --graphics vnc,listen=0.0.0.0 --noautoconsole -v

The command will wait for the installation to complete. You can access the GUI installation using the VNC client.

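Forward a local port to access VNC on the KVM host. Make sure you replace 5900 with the port that matches the display reported by virsh vncdisplay node0, where node0 is the name of your machine. Display :0 corresponds to port 5900, :1 to 5901, and so on: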

$ ssh kvm_hostname_or_ip -L 5900:127.0.0.1:5900

Now you can connect to VNC at localhost:5900.
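The mapping from a VNC display to a TCP port is simple arithmetic: the port is 5900 plus the display number. The helper below is a small sketch of that conversion; the name vnc_port is hypothetical and it assumes the display string has the form :N or host:N.

```shell
# Sketch: convert the output of "virsh vncdisplay" (for example ":1" or
# "127.0.0.1:1") into the TCP port to forward. vnc_port is a hypothetical name.
vnc_port() {
    local display="$1"
    local number="${display##*:}"   # keep only the digits after the last colon
    echo $((5900 + number))
}
```

On the KVM host, vnc_port "$(virsh vncdisplay ubuntu01)" prints the port to use in place of 5900 in the ssh command above.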

Easy cloud images with uvtool

Ubuntu provides another easy-to-use tool named uvtool. This tool focuses on the creation of virtual machines out of Ubuntu Cloud images. It synchronizes cloud images from Ubuntu servers to your local machine. Later, these images can be used to launch virtual machines in minutes. You can install and use uvtool with the following commands:

$ sudo apt-get install uvtool

Download the Xenial image from the cloud images:

$ uvt-simplestreams-libvirt sync release=xenial arch=amd64

Start a virtual machine:

$ uvt-kvm create virtsys01

Finally, get the IP of a running system:

$ uvt-kvm ip virtsys01

Check out the manual page with the man uvtool command and visit the official uvtool page at https://help.ubuntu.com/lts/serverguide/cloud-images-and-uvtool.html for more details.
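The uvtool commands above can be chained into one step that also sets the size of the guest. This is a sketch only: launch_guest is a hypothetical wrapper, the memory (MB), CPU, and disk (GB) values are arbitrary examples, and it assumes the Xenial image has already been synchronized and that your user has an SSH public key, which uvt-kvm create normally expects.

```shell
# Sketch: create a sized uvtool guest, wait for it to boot, and print its IP.
# launch_guest is a hypothetical wrapper around the uvt-kvm subcommands.
launch_guest() {
    local name="$1"

    # Options come first, then the machine name and the image filters.
    uvt-kvm create --memory 1024 --cpu 1 --disk 8 \
        "$name" release=xenial arch=amd64 || return 1

    # Block until the guest has booted and is reachable.
    uvt-kvm wait "$name" || return 1

    uvt-kvm ip "$name"
}
```

After launch_guest virtsys01 returns, uvt-kvm ssh virtsys01 opens a shell in the guest and uvt-kvm destroy virtsys01 removes it again.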

See also

- Check out the manual pages for virt-install using

$ man virt-install

- Check out the manual pages for virsh using

$ man virsh

- The official Libvirt site: http://libvirt.org/

- The Libvirt documentation on Ubuntu Server guide: https://help.ubuntu.com/lts/serverguide/libvirt.html

Setting up your own cloud with OpenStack

We have already seen how to create virtual machines with KVM and Qemu, and how to manage them with tools such as virsh and virt-manager. This approach works when you need to work with a handful of machines and manage a few hosts. To operate on a larger scale, you need a tool to manage host machines, VM configurations, images, network, and storage, and monitor the entire environment. OpenStack is an open source initiative to create and manage a large pool of virtual machines (or containers). It is a collection of various tools to deploy IaaS clouds. The official site defines OpenStack as an operating system to control a large pool of compute, network, and storage resources, all managed through a dashboard.

OpenStack was primarily developed and open-sourced by Rackspace, a leading cloud service provider. With its thirteenth release, Mitaka, OpenStack provides a wide range of tools to manage various components of your infrastructure. A few important components of OpenStack are as follows:

- Nova: Compute controller

- Neutron: OpenStack networking

- Keystone: Identity service

- Glance: OpenStack image service

- Horizon: OpenStack dashboard

- Cinder: Block storage service

- Swift: Object store

- Heat: Orchestration program

OpenStack in itself is quite a big deployment. You need to decide the required components, plan their deployment, and install and configure them to work in sync. The installation itself could fill a separate book. However, the OpenStack community has developed a set of scripts known as DevStack to support development with faster deployments. In this recipe, we will use the DevStack script to quickly install OpenStack and get an overview of its workings. The official OpenStack documentation provides detailed documents for the Ubuntu-based installation and configuration of various components. If you are planning a serious production environment, you should read it thoroughly.

Getting ready

You will need a non-root account with sudo privileges. The default account named ubuntu should work.

The system should have at least two CPU cores with at least 4 GB of RAM and 60 GB of disk space. A static IP address is preferred. If possible, use the minimal installation of Ubuntu.

Tip

If you are performing a fresh installation of Ubuntu Server, press F4 on the first screen to get installation options, and choose Install Minimal System. If you are installing inside a virtual machine, choose Install Minimal Virtual Machine. You may need to go to the installation menu with the Esc key before using F4.

DevStack scripts are available on GitHub. Clone the repository or download and extract it to your installation server. Use the following command to clone:

$ git clone https://git.openstack.org/openstack-dev/devstack \
-b stable/mitaka --depth 1
$ cd devstack

You can choose to get the latest development version by selecting the master branch. Just omit the -b stable/mitaka option from the previous command.

How to do it…

Once you obtain the DevStack source, it's as easy as executing an installation script. Before that, we will create a minimal configuration file for passwords and basic network configuration:

- Copy the sample configuration to the root of the

devstackdirectory:$ cp samples/local.conf - Edit

local.confand update passwords:ADMIN_PASSWORD=password DATABASE_PASSWORD=password RABBIT_PASSWORD=password SERVICE_PASSWORD=$ADMIN_PASSWORD

- Add basic network configuration as follows. Update IP address range as per your local network configuration and set

FLAT_INTERFACEto your primary Ethernet interface:FLOATING_RANGE=192.168.1.224/27 FIXED_RANGE=10.11.12.0/24 FIXED_NETWORK_SIZE=256 FLAT_INTERFACE=eth0

Save the changes to the configuration file.

- Now, start the installation with the following command. As the Mitaka stable branch has not been tested with Ubuntu Xenial (16.04), we need to use the

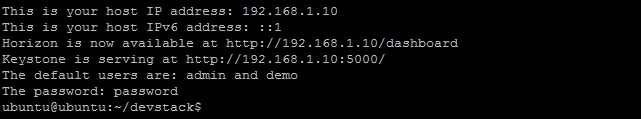

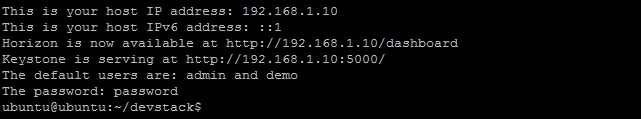

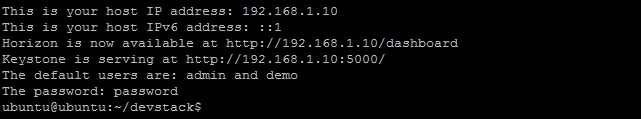

FORCEvariable. If you are using the master branch of DevStack or an older version of Ubuntu, you can start the installation with the./stack.shcommand:$ FORCE=yes ./stack.shThe installation should take some time to complete, mostly depending on your network speed. Once the installation completes, the script should output the dashboard URL, keystone API endpoint, and the admin password:

- Now, access the OpenStack dashboard and log in with the given username and password. The admin account will give you an admin interface. The login screen looks like this:

- Once you log in, your admin interface should look something like this:

Now, from this screen, you can deploy new virtual instances, set up different cloud images, and configure instance flavors.
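As an alternative to editing the sample file by hand, the configuration from the steps above can be written in one go. This is a sketch that assumes you run it from the devstack directory and are happy to replace any existing local.conf; the passwords and address ranges are the placeholder values used above and should be changed for your network. The [[local|localrc]] header is the section marker DevStack uses for these settings.

```shell
# Sketch: write a minimal local.conf in one step instead of editing the sample.
# The quoted 'EOF' keeps $ADMIN_PASSWORD literal so DevStack expands it later.
cat > local.conf <<'EOF'
[[local|localrc]]
ADMIN_PASSWORD=password
DATABASE_PASSWORD=password
RABBIT_PASSWORD=password
SERVICE_PASSWORD=$ADMIN_PASSWORD

FLOATING_RANGE=192.168.1.224/27
FIXED_RANGE=10.11.12.0/24
FIXED_NETWORK_SIZE=256
FLAT_INTERFACE=eth0
EOF
```

Keeping the whole file in a script like this makes it easy to rebuild the same test environment on another machine.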

How it works…

We used DevStack, an unattended installation script, to install and configure a basic OpenStack deployment. This installs OpenStack with the bare minimum components needed to deploy virtual machines. By default, DevStack installs the identity service, Nova network, compute service, and image service. The installation process creates two user accounts, namely admin and demo. The admin account gives you administrative access to the OpenStack installation and the demo account gives you the end user interface. The DevStack installation also adds a Cirros image to the image store. This is a basic lightweight Linux distribution and a good candidate to test the OpenStack installation.

The default installation creates a basic flat network. You can also configure DevStack to enable Neutron support, by setting the required options in the configuration. Check out the DevStack documentation for more details.
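To see what the installer registered without opening the dashboard, you can query the deployment from the command line. The sketch below makes several assumptions: show_cloud_summary is a hypothetical helper, it is run from the devstack directory in a bash shell, and the openstack command-line client installed by DevStack is on your PATH. It loads credentials from the openrc file that ships with DevStack and lists the services and images.

```shell
# Sketch: load DevStack credentials and list what the installer registered.
# show_cloud_summary is a hypothetical helper; run it from the devstack directory.
show_cloud_summary() {
    local user="${1:-admin}"
    local project="${2:-admin}"

    # openrc exports the OS_* variables used by the CLI; passing the user and
    # project as arguments relies on bash.
    . ./openrc "$user" "$project" || return 1

    openstack service list   # identity, compute, image, and other services
    openstack image list     # should include the Cirros test image
}
```

Calling show_cloud_summary with no arguments uses the admin account; pass a different user and project to see the view of a regular account.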

There's more…

Ubuntu provides its own easy-to-use OpenStack installer. It provides options to install OpenStack, along with LXD support and OpenStack Autopilot, an enterprise offering by Canonical. You can choose to install on your local machine (all-in-one installation) or choose a Metal as a Service (MAAS) setup for a multinode deployment. The single-machine setup will install OpenStack on multiple LXC containers, deployed and managed through Juju. You will need at least 12 GB of main memory and an 8-CPU server. Use the following commands to get started with the Ubuntu OpenStack installer:

$ sudo apt-get update
$ sudo apt-get install conjure-up
$ conjure-up openstack

While DevStack installs a development-focused minimal installation of OpenStack, various other scripts support the automation of the OpenStack installation process. A notable project is OpenStack Ansible. This is an official OpenStack project and provides production-grade deployments. A quick GitHub search should give you a lot more options.

See also

- A step-by-step detailed guide to installing various OpenStack components on Ubuntu server: http://docs.openstack.org/mitaka/install-guide-ubuntu/

- DevStack Neutron configuration: http://docs.openstack.org/developer/devstack/guides/neutron.html

- OpenStack Ansible: https://github.com/openstack/openstack-ansible

- A list of OpenStack resources: https://github.com/ramitsurana/awesome-openstack

- Ubuntu MaaS: http://www.ubuntu.com/cloud/maas

- Ubuntu Juju: http://www.ubuntu.com/cloud/juju

- Read more about LXD and LXC in Chapter 8, Working with Containers

Getting ready

You will need a non-root account with sudo privileges. The default account named ubuntu should work.

The system should have at least two CPU cores with at least 4 GB of RAM and 60 GB of disk space. A static IP address is preferred. If possible, use the minimal installation of Ubuntu.

Tip

If you are performing a fresh installation of Ubuntu Server, press F4 on the first screen to get installation options, and choose Install Minimal System. If you are installing inside a virtual machine, choose Install Minimal Virtual Machine. You may need to go to the installation menu with the Esc key before using F4.

DevStack scripts are available on GitHub. Clone the repository or download and extract it to your installation server. Use the following command to clone:

$ git clone https://git.openstack.org/openstack-dev/devstack \ -b stable/mitaka --depth 1 $ cd devstack

You can choose to get the latest release by selecting the master branch. Just skip the -b stable/mitaka option from the previous command.

How to do it…

Once you obtain the DevStack source, it's as easy as executing an installation script. Before that, we will create a minimal configuration file for passwords and basic network configuration:

- Copy the sample configuration to the root of the devstack directory:

$ cp samples/local.conf .

- Edit local.conf and update the passwords:

ADMIN_PASSWORD=password
DATABASE_PASSWORD=password
RABBIT_PASSWORD=password
SERVICE_PASSWORD=$ADMIN_PASSWORD

- Add the basic network configuration as follows. Update the IP address ranges as per your local network configuration and set FLAT_INTERFACE to your primary Ethernet interface:

FLOATING_RANGE=192.168.1.224/27
FIXED_RANGE=10.11.12.0/24
FIXED_NETWORK_SIZE=256
FLAT_INTERFACE=eth0

Save the changes to the configuration file.

- Now, start the installation with the following command. As the Mitaka stable branch has not been tested with Ubuntu Xenial (16.04), we need to set the FORCE variable. If you are using the master branch of DevStack or an older version of Ubuntu, you can start the installation with the ./stack.sh command alone:

$ FORCE=yes ./stack.sh

The installation should take some time to complete, mostly depending on your network speed. Once the installation completes, the script should output the dashboard URL, keystone API endpoint, and the admin password.

- Now, access the OpenStack dashboard and log in with the given username and password. The admin account will give you an admin interface.

- Once you log in, you can use the admin interface to deploy new virtual instances, set up different cloud images, and configure instance flavors.
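The configuration steps above can also be scripted. The following is a minimal sketch, not an official DevStack tool: write_local_conf is a hypothetical helper that writes a fresh local.conf instead of editing the copied sample, and it assumes the [[local|localrc]] section header used by the DevStack sample file. The network ranges are the example values from this recipe and must be adapted to your own network.

```shell
#!/bin/sh
# write_local_conf DIR PASSWORD INTERFACE
# Hypothetical helper: writes DIR/local.conf with the settings used in this recipe.
write_local_conf() {
    target_dir=$1
    password=$2
    flat_interface=$3
    # \$ADMIN_PASSWORD is escaped so that it reaches the file literally and is
    # expanded later by DevStack, exactly as in the hand-edited version.
    cat > "$target_dir/local.conf" <<EOF
[[local|localrc]]
ADMIN_PASSWORD=$password
DATABASE_PASSWORD=$password
RABBIT_PASSWORD=$password
SERVICE_PASSWORD=\$ADMIN_PASSWORD
FLOATING_RANGE=192.168.1.224/27
FIXED_RANGE=10.11.12.0/24
FIXED_NETWORK_SIZE=256
FLAT_INTERFACE=$flat_interface
EOF
}
```

For example, running write_local_conf . password eth0 from the devstack directory should produce the same settings as the manual steps. Note that this overwrites any existing local.conf in that directory.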

How it works…

We used DevStack, an unattended installation script, to install and configure a basic OpenStack deployment. This installs OpenStack with the bare minimum components needed to deploy virtual machines. By default, DevStack installs the identity service, Nova network, compute service, and image service. The installation process creates two user accounts, namely admin and demo. The admin account gives you administrative access to the OpenStack installation and the demo account gives you the end user interface. The DevStack installation also adds a Cirros image to the image store. This is a basic lightweight Linux distribution and a good candidate for testing an OpenStack installation.

The default installation creates a basic flat network. You can also configure DevStack to enable Neutron support, by setting the required options in the configuration. Check out the DevStack documentation for more details.
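When adapting the flat network ranges to your own network, it helps to know how many addresses a given prefix length covers. The following is a small sketch; cidr_size is a hypothetical helper, not a DevStack command, and it only counts the addresses in the block, not all of which are necessarily assignable.

```shell
#!/bin/sh
# cidr_size CIDR: print the number of IPv4 addresses in the block,
# computed as 2^(32 - prefix length).
cidr_size() {
    prefix=${1#*/}                    # keep only the part after the slash
    echo $(( 1 << (32 - prefix) ))
}

cidr_size 192.168.1.224/27   # FLOATING_RANGE from this recipe: prints 32
cidr_size 10.11.12.0/24      # FIXED_RANGE from this recipe: prints 256
```

The second result matches the FIXED_NETWORK_SIZE=256 setting used in the configuration.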

There's more…

Ubuntu provides its own easy-to-use OpenStack installer. It provides options to install OpenStack, along with LXD support and OpenStack Autopilot, an enterprise offering by Canonical. You can choose to install on your local machine (all-in-one installation) or choose a Metal as a Service (MAAS) setup for a multinode deployment. The single-machine setup will install OpenStack in multiple LXC containers, deployed and managed through Juju. You will need a server with at least 12 GB of main memory and 8 CPUs. Use the following commands to get started with the Ubuntu OpenStack installer:

$ sudo apt-get update
$ sudo apt-get install conjure-up
$ conjure-up openstack

While DevStack installs a development-focused minimal installation of OpenStack, various other scripts support the automation of the OpenStack installation process. A notable project is OpenStack Ansible. This is an official OpenStack project and provides production-grade deployments. A quick GitHub search should give you a lot more options.

See also

- A step-by-step detailed guide to installing various OpenStack components on Ubuntu server: http://docs.openstack.org/mitaka/install-guide-ubuntu/

- DevStack Neutron configuration: http://docs.openstack.org/developer/devstack/guides/neutron.html

- OpenStack Ansible: https://github.com/openstack/openstack-ansible

- A list of OpenStack resources: https://github.com/ramitsurana/awesome-openstack

- Ubuntu MaaS: http://www.ubuntu.com/cloud/maas

- Ubuntu Juju: http://www.ubuntu.com/cloud/juju

- Read more about LXD and LXC in Chapter 8, Working with Containers

Adding a cloud image to OpenStack