Detecting faces with the Face API

With the newly created project, we will now try our first API, the Face API. We will not do a whole lot, but we will see how simple it is to detect faces in images.

The steps we need to cover to do this are as follows:

- Register for a free Face API preview subscription.

- Add the necessary NuGet packages to our project.

- Add some UI to the application.

- Detect faces on command.

Head over to https://www.microsoft.com/cognitive-services/en-us/face-api to start registering for a free subscription to the Face API. Clicking the yellow button labeled Get started for free takes you to a login page. Log on with your Microsoft account, or register for one if you do not have one.

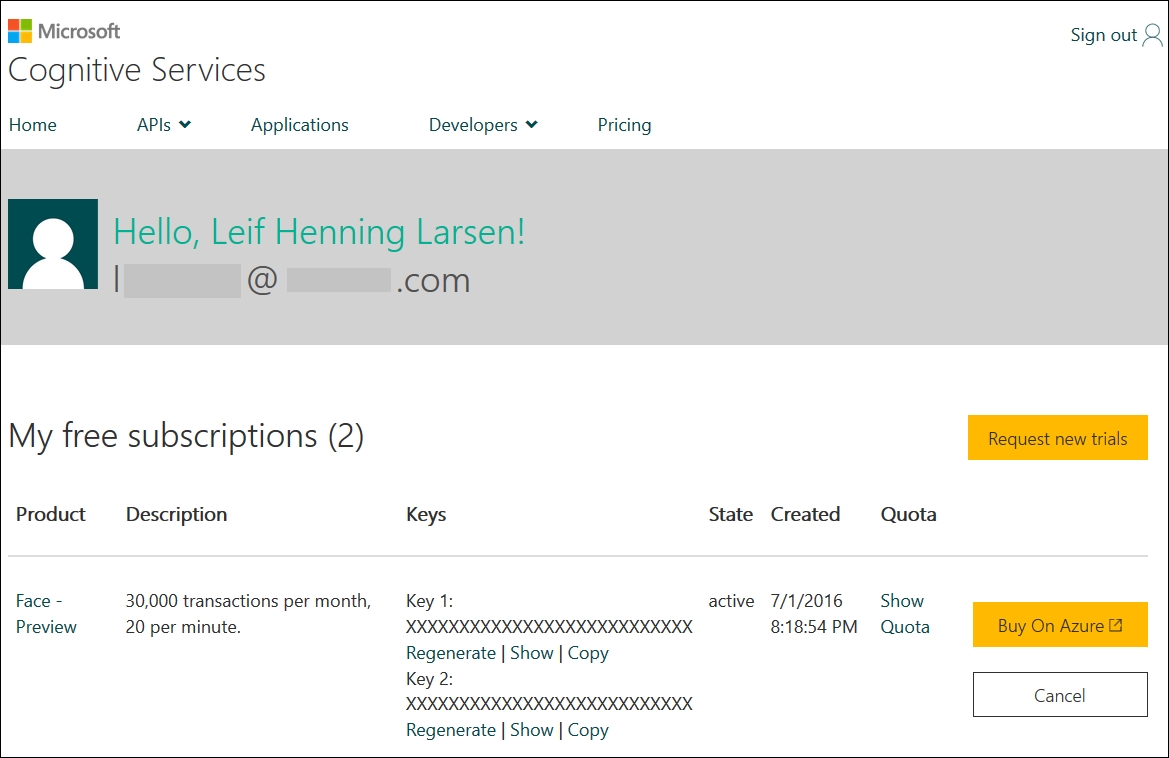

Once logged in, you will need to verify that the Face API Preview has been selected in the list, and accept the terms and conditions. With that out of the way, you will be presented with the following:

You will need one of the two keys later when we are accessing the API.

This page is where we will find all our API keys throughout this book. You could register for more APIs right away, but we will instead register for each new API as we add it.

Some of the APIs we will cover have their own NuGet packages. Whenever this is the case, we will use those packages to perform the operations we need. All the APIs are REST APIs, which in practice means you can use them from any language that can make HTTP requests. For APIs that do not have their own NuGet package, we call them directly over HTTP.
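The following minimal sketch shows what calling a REST API directly involves. It only builds the request and does not send it. It assumes the Cognitive Services convention of passing the key in an `Ocp-Apim-Subscription-Key` header and uploading the image as raw `application/octet-stream` data. The `FaceRequests` class, the `BuildDetectRequest` method, and any endpoint URL you pass in are illustrative assumptions, so check the API reference for the exact address of your subscription.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class FaceRequests
{
    // Hypothetical helper: builds (but does not send) a POST request for a detect call.
    // The endpoint is supplied by the caller; use the one listed for your subscription.
    public static HttpRequestMessage BuildDetectRequest(string endpoint, string apiKey, byte[] imageBytes)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, endpoint);

        // Cognitive Services read the subscription key from this header
        request.Headers.Add("Ocp-Apim-Subscription-Key", apiKey);

        // The image travels as raw binary data in the request body
        request.Content = new ByteArrayContent(imageBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

        return request;
    }
}
```

To send the request, you would call `SendAsync` on an `HttpClient` and then deserialize the JSON response, for example with Newtonsoft.Json. A NuGet package such as the one we use next hides exactly this plumbing.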

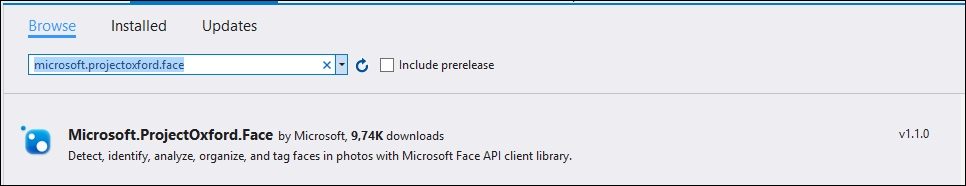

For the Face API we are using now, a NuGet package does exist, so we need to add that to our project. Head over to the NuGet Package Manager option for the project we created earlier. In the Browse tab search for the Microsoft.ProjectOxford.Face package and install the package from Microsoft:

You will notice that a second package, Newtonsoft.Json, is installed as well. The Face API package depends on it.

The next step is to add some UI to our application, which we do in the MainView.xaml file. Open this file; it should contain the template code we created earlier. This means we already have a DataContext and can bind the elements we are about to define to the ViewModel.

First we add a grid and define some rows for the grid:

```xml
<Grid>
    <Grid.RowDefinitions>
        <RowDefinition Height="*" />
        <RowDefinition Height="20" />
        <RowDefinition Height="30" />
    </Grid.RowDefinitions>
```

This defines three rows. The first row holds the image and, with a height of `*`, takes up all the remaining space. The second row holds a status message, and the last row is where we place the buttons.

Next we add our image element:

```xml
<Image x:Name="FaceImage" Stretch="Uniform" Source="{Binding ImageSource}" Grid.Row="0" />
```

We give the image a unique name. Setting the Stretch property to Uniform ensures that the image keeps its aspect ratio. We also place this element in the first row. Finally, we bind the image source to a BitmapImage property in the ViewModel, which we will look at in a bit.
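With `Stretch="Uniform"`, the image is scaled by the same factor in both directions. That factor is the largest one that still fits the available area. The following is a minimal sketch of that arithmetic. It ignores margins and layout rounding, and the `UniformFit` helper is hypothetical rather than part of WPF.

```csharp
using System;

public static class UniformFit
{
    // Hypothetical helper illustrating the math behind Stretch.Uniform:
    // scale by the smaller of the two ratios so the whole image fits
    // while keeping its aspect ratio.
    public static (double Width, double Height) Fit(
        double imageWidth, double imageHeight,
        double availableWidth, double availableHeight)
    {
        double scale = Math.Min(availableWidth / imageWidth, availableHeight / imageHeight);
        return (imageWidth * scale, imageHeight * scale);
    }
}
```

For example, an 800 x 600 image placed in a 400 x 400 area is scaled by 0.5 and shown at 400 x 300, leaving empty space above and below it.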

The next row will contain a text block with some status text. The Text property will be bound to a string property in the ViewModel:

```xml
<TextBlock x:Name="StatusTextBlock" Text="{Binding StatusText}" Grid.Row="1" />
```

The last row contains two buttons: one to browse for an image and one to detect faces. The Command property of each button is bound to a DelegateCommand property in the ViewModel:

```xml
<Button x:Name="BrowseButton" Content="Browse" Height="20" Width="140" HorizontalAlignment="Left"
        Command="{Binding BrowseButtonCommand}" Margin="5, 0, 0, 5" Grid.Row="2" />

<Button x:Name="DetectFaceButton" Content="Detect face" Height="20" Width="140" HorizontalAlignment="Right"
        Command="{Binding DetectFaceCommand}" Margin="0, 0, 5, 5" Grid.Row="2" />
<!-- Closes the Grid opened above -->
</Grid>
```

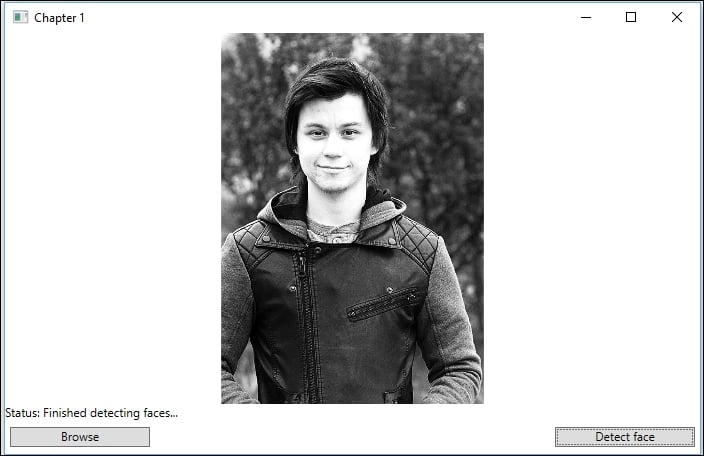

With the View in place, make sure the code compiles and run it. This should present you with the following UI:

The last part is to create the binding properties in our ViewModel and make the buttons do something. Open the MainViewModel.cs file. The class should already inherit from the ObservableObject class. First, we define two fields:

```csharp
private string _filePath;
private IFaceServiceClient _faceServiceClient;
```

The string field holds the path to our image, while the IFaceServiceClient field is our interface to the Face API. Next, we define two properties:

```csharp
private BitmapImage _imageSource;

public BitmapImage ImageSource
{
    get { return _imageSource; }
    set
    {
        _imageSource = value;
        RaisePropertyChangedEvent("ImageSource");
    }
}

private string _statusText;

public string StatusText
{
    get { return _statusText; }
    set
    {
        _statusText = value;
        RaisePropertyChangedEvent("StatusText");
    }
}
```

Here we have a BitmapImage property, bound to the Image element in the View, and a string property for the status text, bound to the text block in the View. Notice that whenever either property is set, we call the RaisePropertyChangedEvent method. This ensures that the UI updates when either property gets a new value.
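ObservableObject comes from the template we created earlier. The following is a minimal sketch of such a base class built on INotifyPropertyChanged, which shows what RaisePropertyChangedEvent does under the hood. It is an assumption about how the class may look, not necessarily the template's exact code, and `SampleViewModel` is a hypothetical class for illustration.

```csharp
using System.ComponentModel;

// Minimal sketch of an ObservableObject base class (assumed shape)
public class ObservableObject : INotifyPropertyChanged
{
    public event PropertyChangedEventHandler PropertyChanged;

    // Tells bound UI elements that the named property has a new value
    protected void RaisePropertyChangedEvent(string propertyName)
    {
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
    }
}

// Hypothetical view model following the same pattern as StatusText
public class SampleViewModel : ObservableObject
{
    private string _statusText;

    public string StatusText
    {
        get { return _statusText; }
        set
        {
            _statusText = value;
            RaisePropertyChangedEvent(nameof(StatusText));
        }
    }
}
```

Using `nameof(StatusText)` instead of the string literal `"StatusText"` raises the same event, but lets the compiler catch typos in the property name.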

Next, we define our two command properties and do some initialization in the constructor:

```csharp
public ICommand BrowseButtonCommand { get; private set; }
public ICommand DetectFaceCommand { get; private set; }

public MainViewModel()
{
    StatusText = "Status: Waiting for image...";

    _faceServiceClient = new FaceServiceClient("YOUR_API_KEY_HERE");

    BrowseButtonCommand = new DelegateCommand(Browse);
    DetectFaceCommand = new DelegateCommand(DetectFace, CanDetectFace);
}
```

Both command properties have a public getter but a private setter, so they can only be set from within the ViewModel. In the constructor, we start by setting the status text. Next, we create a FaceServiceClient object, passing it the API key we got earlier.

Finally, we create DelegateCommand objects for our command properties. Notice that the browse command does not specify a predicate, which means the corresponding button can always be clicked. To make this compile, we need to create the methods passed to the DelegateCommand constructors: Browse, DetectFace, and CanDetectFace:
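DelegateCommand also comes from the earlier template. The following is a minimal sketch of how such a class can implement ICommand with an optional predicate. It is an assumed shape, not necessarily the template's exact code.

```csharp
using System;
using System.Windows.Input;

// Minimal sketch of a DelegateCommand (assumed shape)
public class DelegateCommand : ICommand
{
    private readonly Action<object> _execute;
    private readonly Predicate<object> _canExecute;

    public DelegateCommand(Action<object> execute, Predicate<object> canExecute = null)
    {
        _execute = execute ?? throw new ArgumentNullException(nameof(execute));
        _canExecute = canExecute;
    }

    public event EventHandler CanExecuteChanged;

    // Without a predicate, the command can always execute
    public bool CanExecute(object parameter) => _canExecute == null || _canExecute(parameter);

    public void Execute(object parameter) => _execute(parameter);

    // Call this when the predicate's result may have changed
    public void RaiseCanExecuteChanged() => CanExecuteChanged?.Invoke(this, EventArgs.Empty);
}
```

In WPF, many implementations instead forward CanExecuteChanged to `CommandManager.RequerySuggested`. WPF then re-evaluates a predicate such as CanDetectFace on its own, for example after an image has been loaded. With the sketch above, you would call `RaiseCanExecuteChanged` yourself.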

```csharp
private void Browse(object obj)
{
    var openDialog = new Microsoft.Win32.OpenFileDialog();
    openDialog.Filter = "JPEG Image(*.jpg)|*.jpg";
    bool? result = openDialog.ShowDialog();

    // ShowDialog returns false (or null) if the user cancelled
    if (result != true) return;
```

We start the Browse method by creating an OpenFileDialog object. We assign it a filter for JPEG images and then open it. When the dialog closes, we check the result. If the user cancelled, we simply stop further execution.

```csharp
    _filePath = openDialog.FileName;
    Uri fileUri = new Uri(_filePath);
```

With the dialog closed, we grab the name of the selected file and create a new URI from it.

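```csharp
    // BitmapImage only honors CacheOption and UriSource when they are set
    // between BeginInit and EndInit; changes made after initialization are ignored
    BitmapImage image = new BitmapImage();
    image.BeginInit();
    image.CacheOption = BitmapCacheOption.None;
    image.UriSource = fileUri;
    image.EndInit();
```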

From the newly created URI, we create a new BitmapImage. We specify that it should use no cache, and we set its URI source to the URI we created.

```csharp
    ImageSource = image;
    StatusText = "Status: Image loaded...";
}
```

The last step is to assign the bitmap image to our ImageSource property so that the image is shown in the UI. We also update the status text to let the user know the image has been loaded.

Before we move on, make sure the code compiles and that you can load an image into the View. Next, we add the CanDetectFace method:

```csharp
private bool CanDetectFace(object obj)
{
    // Enable detection only when a loaded image has a source URI
    return !string.IsNullOrEmpty(ImageSource?.UriSource?.ToString());
}
```

The CanDetectFace method determines whether the DetectFaceButton button should be enabled. Here, it checks whether our image property actually has a URI. If it does, we have an image by extension and should be able to detect faces.

```csharp
private async void DetectFace(object obj)
{
    FaceRectangle[] faceRects = await UploadAndDetectFacesAsync();

    string textToSpeak = "No faces detected";

    if (faceRects.Length == 1)
        textToSpeak = "1 face detected";
    else if (faceRects.Length > 1)
        textToSpeak = $"{faceRects.Length} faces detected";

    Debug.WriteLine(textToSpeak);
}
```

Our DetectFace method calls an async method that uploads the image and detects faces. It returns an array of FaceRectangle objects, one for the rectangular area of each face found in the image. We will look at the method we call in a bit.

After the call has finished, we print a line with the number of detected faces to the debug console window.
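If you want to test the branching in DetectFace without calling the API, you can pull it out into a small pure function. The following is a sketch of that refactoring; the `FaceMessages.FaceCountMessage` name is hypothetical.

```csharp
public static class FaceMessages
{
    // Hypothetical helper: turns a face count into the text DetectFace prints
    public static string FaceCountMessage(int faceCount)
    {
        if (faceCount == 1)
            return "1 face detected";
        if (faceCount > 1)
            return $"{faceCount} faces detected";
        return "No faces detected";
    }
}
```

DetectFace would then reduce to `Debug.WriteLine(FaceMessages.FaceCountMessage(faceRects.Length));`.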

```csharp
private async Task<FaceRectangle[]> UploadAndDetectFacesAsync()
{
    StatusText = "Status: Detecting faces...";

    try
    {
        // Open the selected image as a stream to upload to the Face API
        using (Stream imageFileStream = File.OpenRead(_filePath))
        {
```

In the UploadAndDetectFacesAsync method, we create a Stream from the image file. This stream is the input for the actual call to the Face API service.

```csharp
            Face[] faces = await _faceServiceClient.DetectAsync(
                imageFileStream,
                true,   // return a face ID
                true,   // return face landmarks
                new List<FaceAttributeType>() { FaceAttributeType.Age });  // attributes to return
```

This is the actual call to the Face API detection endpoint. The first parameter is the file stream we created in the previous step. The remaining parameters are all optional. The second parameter should be true if you want a face ID returned. The third specifies whether you want face landmarks. The last takes a list of the face attributes you want returned. In our case we want the age, so we specify FaceAttributeType.Age.
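These optional parameters correspond to query string parameters on the REST detect endpoint: returnFaceId, returnFaceLandmarks, and returnFaceAttributes. The following sketch builds such a query string. The `DetectQuery` helper and the lowercase attribute names such as `age` are assumptions for illustration, so check the Face API reference before relying on them.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class DetectQuery
{
    // Hypothetical helper mirroring DetectAsync's optional parameters as REST query parameters
    public static string Build(bool returnFaceId, bool returnFaceLandmarks, IEnumerable<string> faceAttributes)
    {
        var parts = new List<string>
        {
            "returnFaceId=" + (returnFaceId ? "true" : "false"),
            "returnFaceLandmarks=" + (returnFaceLandmarks ? "true" : "false")
        };

        // Attributes are sent as one comma-separated list, for example "age,gender"
        var attributes = faceAttributes?.ToList() ?? new List<string>();
        if (attributes.Count > 0)
            parts.Add("returnFaceAttributes=" + string.Join(",", attributes));

        return "?" + string.Join("&", parts);
    }
}
```

Our DetectAsync call corresponds to `DetectQuery.Build(true, true, new[] { "age" })`, which yields `?returnFaceId=true&returnFaceLandmarks=true&returnFaceAttributes=age`.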

The call returns an array of Face objects containing the data we asked for, which we process as follows:

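```csharp
            // Approximate age of each detected face
            List<double> ages = faces.Select(face => face.FaceAttributes.Age).ToList();

            // Bounding rectangle of each detected face
            FaceRectangle[] faceRects = faces.Select(face => face.FaceRectangle).ToArray();

            StatusText = "Status: Finished detecting faces...";

            foreach (var age in ages)
            {
                Console.WriteLine(age);
            }

            return faceRects;
        }
    }
    catch (Exception ex)
    {
        // Show the error and return an empty result, so the caller reports no faces
        StatusText = $"Status: Error - {ex.Message}";
        return new FaceRectangle[0];
    }
}
```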

The first statement iterates over all faces and retrieves the approximate age of each one. These ages are printed to the debug console window in the foreach loop that follows.

The second statement iterates over all faces and retrieves each face rectangle, that is, the location of the face in the image. This is the data we return to the calling method.
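The following sketch shows the two LINQ projections in isolation. It uses hypothetical stand-in types that mimic the shape of the library's Face, FaceAttributes, and FaceRectangle classes, reduced to the members used here; the real classes have more members.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the library types, reduced to the members used here
public class SampleFaceRectangle
{
    public int Left { get; set; }
    public int Top { get; set; }
    public int Width { get; set; }
    public int Height { get; set; }
}

public class SampleFaceAttributes
{
    public double Age { get; set; }
}

public class SampleFace
{
    public SampleFaceRectangle FaceRectangle { get; set; }
    public SampleFaceAttributes FaceAttributes { get; set; }
}

public static class FaceProjection
{
    // One age per face, in the same order as the input array
    public static List<double> Ages(SampleFace[] faces) =>
        faces.Select(face => face.FaceAttributes.Age).ToList();

    // One rectangle per face, the kind of data UploadAndDetectFacesAsync returns
    public static SampleFaceRectangle[] Rectangles(SampleFace[] faces) =>
        faces.Select(face => face.FaceRectangle).ToArray();
}
```

Because both projections preserve the input order, `Ages(faces)[i]` and `Rectangles(faces)[i]` always describe the same face.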

To finish the method, we add a catch clause. If our API call throws an exception, we catch it, show the error message, and return an empty FaceRectangle array.

With that code in place, you should now be able to run the full example. The end result will look like the following screenshot:

The debug console window then prints the following text:

```
1 face detected
23,7
```
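Notice that the age is printed as 23,7 rather than 23.7. Console.WriteLine formats the double using the current culture, and the machine that produced this output presumably uses a culture with a comma as its decimal separator. If you need a culture-independent format, for example for logs or files, pass `CultureInfo.InvariantCulture`. In the following sketch, the `AgeFormatting` helper is hypothetical, and the comma format is built by hand to stand in for such a culture.

```csharp
using System.Globalization;

public static class AgeFormatting
{
    // Invariant culture: always uses '.' as the decimal separator
    public static string Invariant(double age) => age.ToString(CultureInfo.InvariantCulture);

    // Hand-built format using ',' as the decimal separator,
    // standing in for cultures such as nb-NO or de-DE
    public static string WithCommaSeparator(double age)
    {
        var commaFormat = new NumberFormatInfo { NumberDecimalSeparator = "," };
        return age.ToString(commaFormat);
    }
}
```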