Before we dive into the details of the message passing model, we will look at a bit of basic terminology.

A running executable program is called a process. When you type a command, the shell looks up the executable and asks the operating system (OS), through system calls, to create a child process. The OS also allocates memory and other resources, such as file descriptors, for the new process. So, for example, when you run the find command (the executable lives at /usr/bin/find), it becomes a child process whose parent process is the shell, as shown in the following diagram:

In case you don't have the pstree command, you could try the ptree command instead. The ps --forest command will also work to show you the tree of processes.
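The parent-child relationship can also be observed from inside a program. The following is a minimal Python sketch, not part of the original example: the helper name spawn_child and the one-line child program are assumptions made for illustration. The script plays the role of the shell, starts a child process, and checks which parent the child reports.

```python
import os
import subprocess
import sys

# Hypothetical one-line child program: it prints its own PID and its parent's PID.
CHILD_SOURCE = "import os; print(os.getpid(), os.getppid())"


def spawn_child():
    """Start a child process and return (child_pid, parent_pid_as_seen_by_child)."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_SOURCE],  # run a fresh Python interpreter
        capture_output=True,
        text=True,
        check=True,
    )
    child_pid, parent_pid = map(int, result.stdout.split())
    return child_pid, parent_pid


if __name__ == "__main__":
    child_pid, parent_pid = spawn_child()
    print(f"this process:          {os.getpid()}")
    print(f"child process:         {child_pid}")
    print(f"parent seen by child:  {parent_pid}")
```

The parent PID reported by the child should match the PID of the script that started it, just as the find process reports the shell as its parent.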

Here is a UNIX shell command that recursively searches a directory tree for HTML files containing the word Mon:

% find . -type f -name '*.html' | xargs egrep -w Mon /dev/null

./Untitled.html:Mon Jun 5 10:23:38 IST 2017

./Untitled.html:Mon Jun 5 10:23:38 IST 2017

./Untitled.html:Mon Jun 5 10:23:38 IST 2017

./Untitled.html:Mon Jun 5 10:23:38 IST 2017

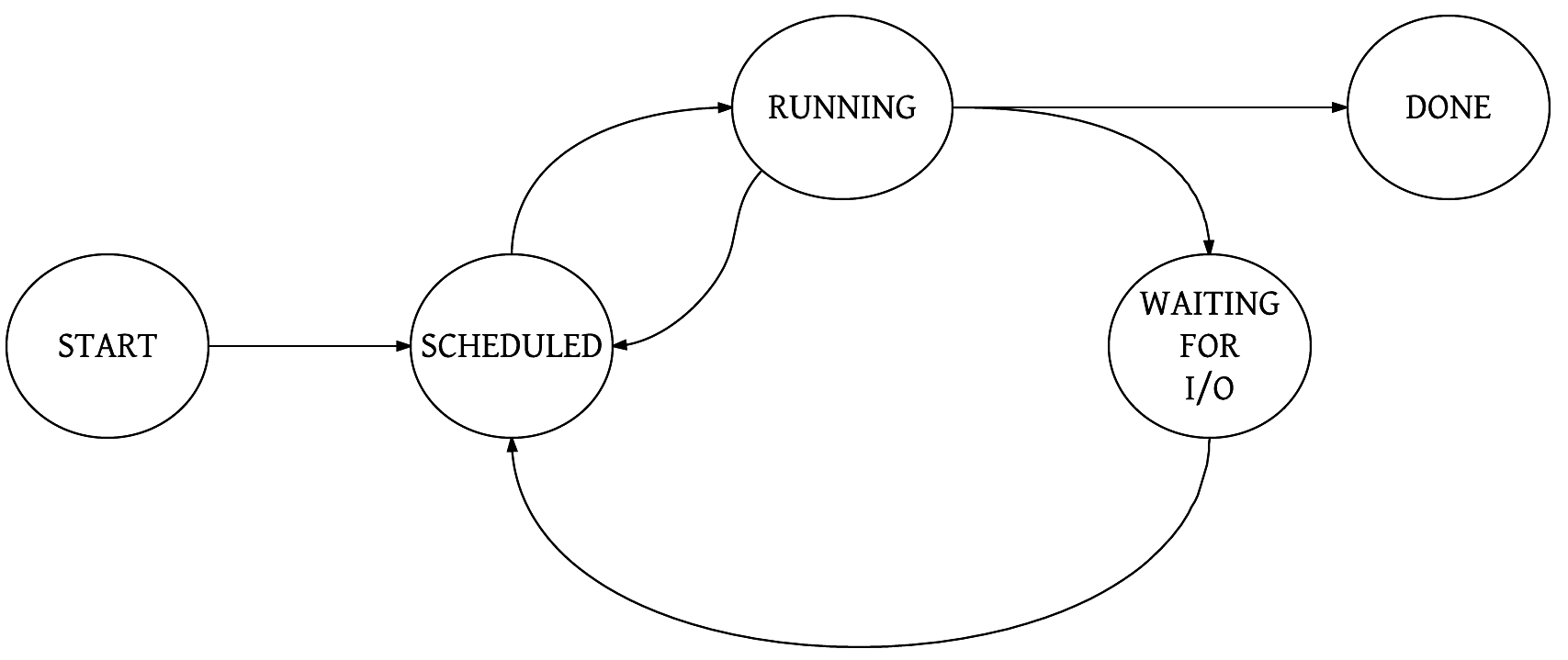

We see a shell pipeline in action here. The find command searches the directory tree rooted at the current directory for all files with the .html extension and writes their names to standard output. The shell creates one process for the find command and another for the xargs command. It also connects the standard output of find to the standard input of xargs via a pipe.

The find process is the producer here, and the xargs process is the consumer of the list of files it produces. xargs collects a batch of filenames and invokes egrep on them, and egrep prints the matching lines to the console. It is important to note that both the processes are running concurrently, as shown in the following diagram:

The two processes collaborate to achieve our goal of recursively searching the directory tree: one produces the filenames, and the other searches those files. Because they run concurrently, results start to appear as soon as a batch of qualifying filenames is available, without waiting for find to finish walking the whole tree. The first results arrive sooner, which makes the system more responsive.
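The same producer and consumer arrangement can be set up from a program. The sketch below is illustrative only: the two child programs are hypothetical stand-ins for find and xargs egrep (they do not touch the filesystem), and the function name run_pipeline is an assumption. What it does show is two concurrent processes connected by a pipe, which is what the shell builds for the | operator.

```python
import subprocess
import sys

# Hypothetical stand-in for find: prints one candidate filename per line.
PRODUCER = r"""
for name in ["index.html", "notes.txt", "about.html"]:
    print(name, flush=True)
"""

# Hypothetical stand-in for xargs egrep: keeps only the names ending in .html.
CONSUMER = r"""
import sys
for line in sys.stdin:
    name = line.strip()
    if name.endswith(".html"):
        print("match:", name, flush=True)
"""


def run_pipeline():
    """Run producer | consumer as two processes and return the consumer's output lines."""
    producer = subprocess.Popen(
        [sys.executable, "-c", PRODUCER],
        stdout=subprocess.PIPE,  # the write end of the pipe
    )
    consumer = subprocess.Popen(
        [sys.executable, "-c", CONSUMER],
        stdin=producer.stdout,  # the read end of the same pipe
        stdout=subprocess.PIPE,
        text=True,
    )
    # Close our copy of the read end so that only the consumer holds it.
    producer.stdout.close()
    output, _ = consumer.communicate()
    producer.wait()
    return output.splitlines()


if __name__ == "__main__":
    for line in run_pipeline():
        print(line)
```

Both child processes are started before either one finishes, so the consumer can begin filtering while the producer is still writing.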

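Just as in real life, collaboration needs communication. The pipe is the mechanism that enables the find process to communicate with the xargs process. The pipe acts both as a communication mechanism and as a coordinator: it carries the filenames from one process to the other, and it makes the reader wait when no data is available and the writer wait when the pipe's buffer is full.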
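The dual role of the pipe can be seen in a small, UNIX-only Python sketch. It assumes os.fork is available, and the function name send_through_pipe is made up for this illustration. The child process writes filenames into the pipe; the parent blocks while reading and leaves its loop only when the child closes the write end.

```python
import os


def send_through_pipe(items):
    """Fork a producer child that writes items to a pipe; read them back in the parent."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child process: the producer. It only writes, so it closes the read end.
        exit_code = 1
        try:
            os.close(read_fd)
            with os.fdopen(write_fd, "w") as writer:
                for item in items:
                    writer.write(item + "\n")
            exit_code = 0
        finally:
            os._exit(exit_code)  # exit at once; never fall through into the parent's code

    # Parent process: the consumer. It only reads, so it closes the write end.
    os.close(write_fd)
    received = []
    with os.fdopen(read_fd) as reader:
        # Reading blocks until data arrives; the loop ends at end-of-file,
        # which happens once the producer has closed its write end.
        for line in reader:
            received.append(line.rstrip("\n"))
    os.waitpid(pid, 0)  # reap the child
    return received


if __name__ == "__main__":
    print(send_through_pipe(["a.html", "b.html"]))
```

No explicit signalling is needed between the two processes here: the data and the end-of-file condition both travel through the pipe itself.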