With the ever-increasing popularity of AI, mobile application users expect apps to adapt to the information that is provided and made available to them. A practical way to make applications adapt to their data is to deploy fine-tuned machine learning models that provide a delightful user experience.

Methods of integrating AI on Android and iOS

Firebase ML Kit

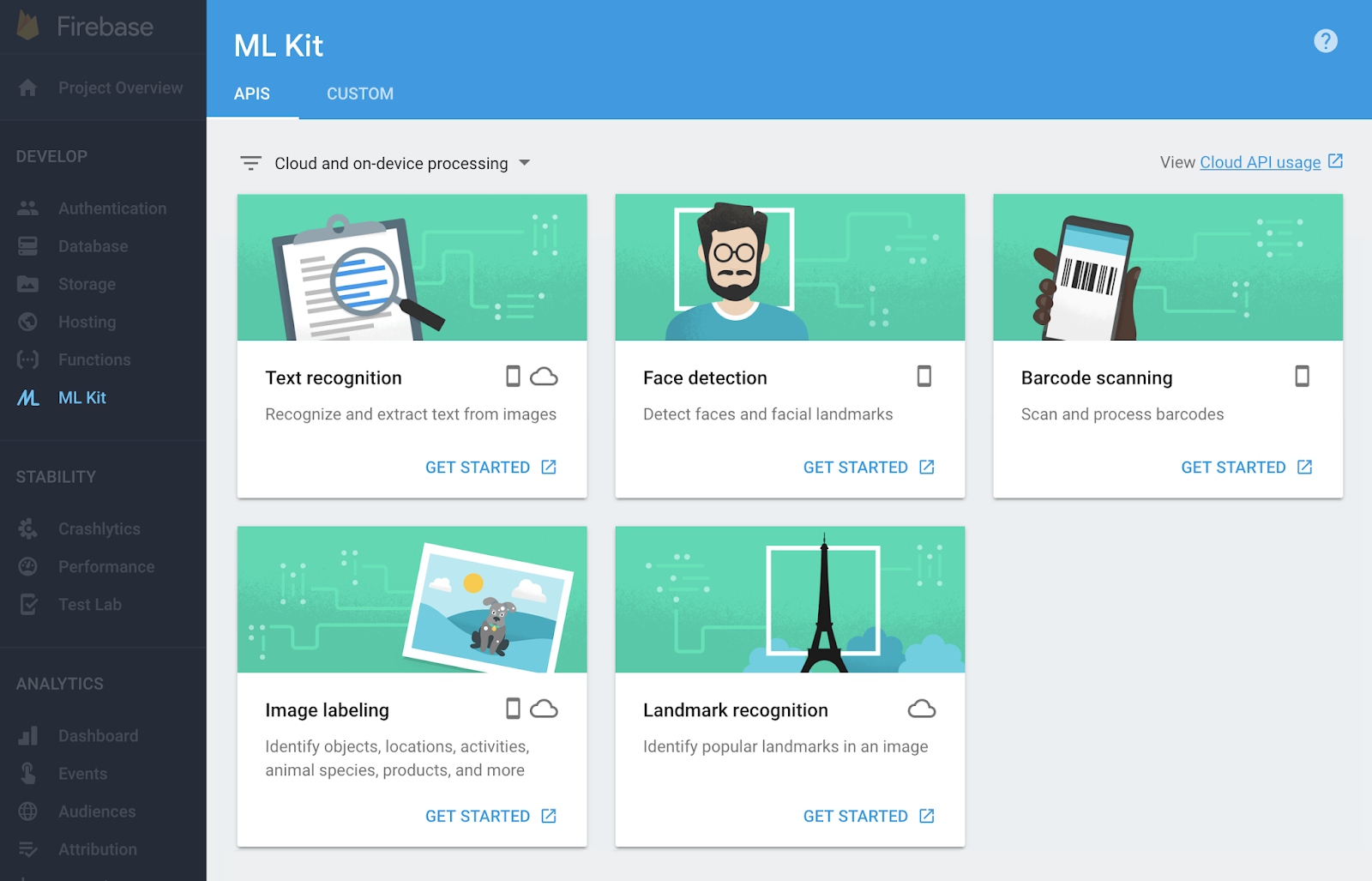

Firebase ML Kit is a machine learning Software Development Kit (SDK) that is available on Firebase for mobile developers. It facilitates the hosting and serving of mobile machine learning models. It reduces the heavy task of running machine learning models on mobile devices to API calls that cover common mobile use cases such as face detection, text recognition, barcode scanning, image labeling, and landmark recognition. Each API takes the input as parameters and returns analytical information about it. The APIs provided by ML Kit can run on the device, in the cloud, or on both. The on-device APIs are independent of network connections and, consequently, work faster than the cloud-based APIs. The cloud-based APIs are hosted on the Google Cloud Platform and use machine learning technology to provide a higher level of accuracy. If the available APIs do not cover the required use case, custom TensorFlow Lite models can be built, hosted, and served using the Firebase console. ML Kit then acts as an API layer over the custom models, making them easy to run. Let's look at the following screenshot:

Here, you can see what the dashboard for Firebase ML Kit looks like.

Core ML

Core ML, a machine learning framework released by Apple in iOS 11, is used to make applications running on iOS, such as Siri, Camera, and QuickType, more intelligent. Delivering efficient performance, Core ML facilitates the easy integration of machine learning models on iOS devices, giving applications the power to analyze and predict from the available data. Standard machine learning models such as tree ensembles, SVMs, and generalized linear models are supported by Core ML. It also supports extensive deep learning, with over 30 types of neural network layers.

Using the Vision framework, features such as face tracking, face detection, text detection, and object tracking can be easily integrated into apps. The Natural Language framework helps to analyze natural language text and deduce its language-specific metadata. When used with Create ML, the framework can be used to deploy custom NLP models. The support for GameplayKit helps in the evaluation of learned decision trees. Core ML is highly efficient because it is built on top of low-level technologies such as Metal and Accelerate, which allows it to take advantage of both the CPU and the GPU. Moreover, Core ML does not require an active network connection to run. It is heavily optimized for on-device execution, so all of the computations are done offline, within the device itself, while minimizing memory footprint and power consumption.

Caffe2

Built on the original Convolutional Architecture for Fast Feature Embedding (Caffe), which was developed at the University of California, Berkeley, Caffe2 is a lightweight, modular, and scalable deep learning framework developed by Facebook. It helps developers and researchers deploy machine learning models and deliver AI-powered performance on Android, iOS, and Raspberry Pi. Additionally, it supports integration in Android Studio, Microsoft Visual Studio, and Xcode. Caffe2 comes with native Python and C++ APIs that work interchangeably, facilitating easy prototyping and optimization. It is efficient enough to handle large sets of data, and it facilitates automation, image processing, and statistical and mathematical operations. Caffe2, which is open source and hosted on GitHub, leverages community contributions for new models and algorithms.

TensorFlow

TensorFlow, an open source software library developed by Google Brain, facilitates high-performance numerical computation. Due to its flexible architecture, it allows easy deployment of deep learning models and neural networks across CPUs, GPUs, and TPUs. Gmail uses a TensorFlow model to understand the context of a message and predict replies in its widely known feature, Smart Reply. TensorFlow Lite is a lightweight version of TensorFlow that aids the deployment of machine learning models on Android and iOS devices. On Android, it leverages the Android Neural Networks API to support hardware acceleration.

The TensorFlow ecosystem, which is available for mobile devices through TensorFlow Lite, is illustrated in the following diagram:

In the preceding diagram, you can see that we need to convert a TensorFlow model into a TensorFlow Lite model before we can use it on mobile devices. This is important because TensorFlow models are bulkier and incur higher latency than the Lite models, which are optimized to run on mobile devices. The conversion is carried out through the TF Lite converter, which can be used in the following ways:

- Using Python APIs: The conversion of a TensorFlow model into a TensorFlow Lite model can be carried out in Python, using whichever of the following tf.lite.TFLiteConverter class methods matches the format of the source model:
  - TFLiteConverter.from_saved_model(): Converts SavedModel directories.
  - TFLiteConverter.from_keras_model(): Converts tf.keras models.
  - TFLiteConverter.from_concrete_functions(): Converts concrete functions.

- Using the command-line tool: The TensorFlow Lite converter is also available as a CLI tool, although it offers fewer capabilities than the Python API version:

    tflite_convert \
      --saved_model_dir=/tf_model \
      --output_file=/tflite_model.tflite
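The two conversion routes described above can be sketched side by side in Python. This is a minimal sketch under stated assumptions, not a definitive implementation: the function names convert_with_python_api and build_cli_command are hypothetical, the paths are the placeholders used in the command above, and the first function assumes that TensorFlow 2.x is installed (it is imported lazily so that the second function works without it).

```python
from pathlib import Path
from typing import List


def convert_with_python_api(saved_model_dir: str, output_file: str) -> int:
    """Convert a SavedModel directory to a .tflite file; return bytes written."""
    # Assumption: TensorFlow 2.x is installed. It is imported here so that
    # the CLI helper below stays usable on machines without TensorFlow.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    tflite_model = converter.convert()  # serialized model returned as bytes
    return Path(output_file).write_bytes(tflite_model)


def build_cli_command(saved_model_dir: str, output_file: str) -> List[str]:
    """Build the equivalent tflite_convert invocation as an argument list."""
    return [
        "tflite_convert",
        f"--saved_model_dir={saved_model_dir}",
        f"--output_file={output_file}",
    ]


if __name__ == "__main__":
    # Only print the command, because /tf_model is a placeholder path.
    print(" ".join(build_cli_command("/tf_model", "/tflite_model.tflite")))
```

Once real paths are substituted, the argument list can be passed to subprocess.run() from a build script, while the Python API route is usually the better fit when the conversion has to be configured from code.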

We will demonstrate the conversion of a TensorFlow model into a TensorFlow Lite model in the upcoming chapters.