Chapter 2. Networking

In this chapter, we will cover the following recipes:

- Connecting to a network with a static IP

- Installing the DHCP server

- Installing the DNS server

- Hiding behind the proxy with squid

- Being on time with NTP

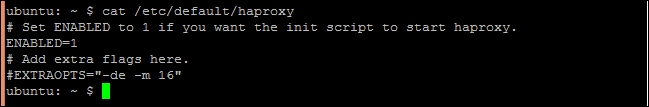

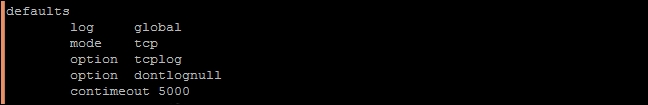

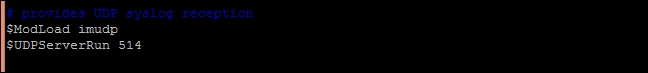

- Discussing load balancing with HAProxy

- Tuning the TCP stack

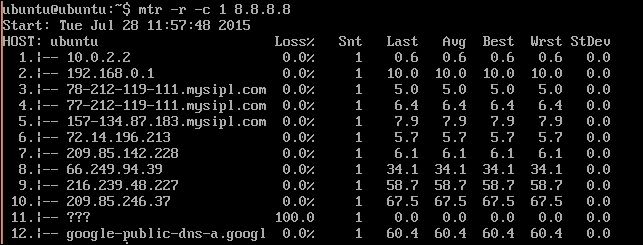

- Troubleshooting network connectivity

- Securing remote access with OpenVPN

- Securing a network with uncomplicated firewall

- Securing against brute force attacks

- Discussing Ubuntu security best practices

Introduction

When we are talking about server systems, networking is the first and most important factor. If you are using an Ubuntu server in a cloud or virtual machine, you generally don't notice the network settings, as they are already configured with various network protocols. However, as your infrastructure grows, managing and securing the network becomes the priority.

Networking can be thought of as an umbrella term for various activities that include network configurations, file sharing and network time management, firewall settings and network proxies, and many others. In this chapter, we will take a closer look at the various networking services that help us set up and effectively manage our networks, be it in the cloud or a local network in your office.

Connecting to a network with a static IP

When you install Ubuntu server, its network setting defaults to dynamic IP addressing; that is, the network management daemon in Ubuntu searches for a DHCP server on the connected network and configures the network with the IP address assigned by DHCP. Even when you start an instance in the cloud, the network is configured with dynamic addressing using the DHCP server set up by the cloud service provider. In this recipe, you will learn how to configure the network interface with static IP assignment.

Getting ready

You will need an Ubuntu server with access to the root account or an account with sudo privileges. If network configuration is a new thing for you, then it is recommended to try this on a local or virtual machine.

How to do it…

Follow these steps to connect to the network with a static IP:

- Get a list of available Ethernet interfaces using the following command:

$ ifconfig -a | grep eth

- Open

/etc/network/interfacesand find the following lines:auto eth0 iface eth0 inet dhcp

- Change the preceding lines to add an IP address, net mask, and default gateway (replace samples with the respective values):

auto eth0 iface eth0 inet static address 192.168.1.100 netmask 255.255.255.0 gateway 192.168.1.1 dns-nameservers 192.168.1.45 192.168.1.46

- Restart the network service for the changes to take effect:

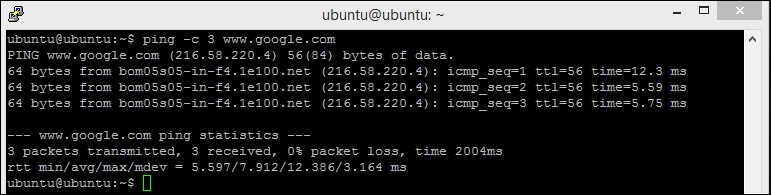

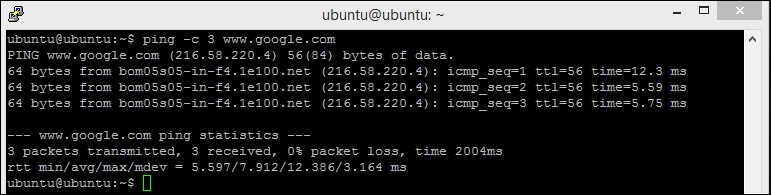

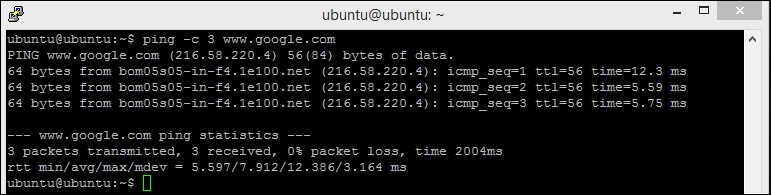

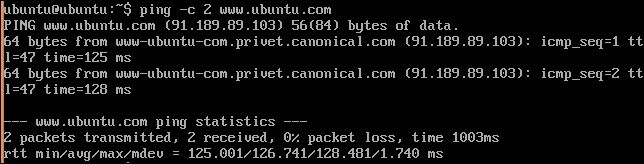

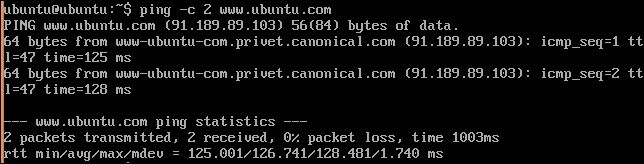

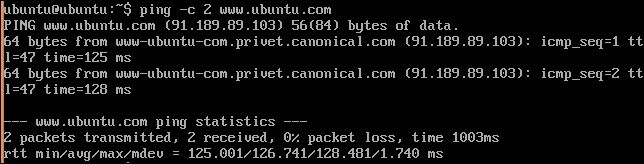

$ sudo /etc/init.d/networking restart - Try to ping a remote host to test the network connection:

$ ping www.google.com

How it works…

In this recipe, we have modified the network configuration from dynamic IP assignment to static assignment.

First, we got a list of all the available network interfaces with ifconfig -a. The -a option of ifconfig returns all the available network interfaces, even if they are disabled. With the help of the pipe (|) symbol, we directed the output of ifconfig to the grep command. For now, we are interested in Ethernet ports only. The grep command filters the received data and returns only the lines that contain the eth character sequence:

ubuntu@ubuntu:~$ ifconfig -a | grep eth
eth0 Link encap:Ethernet HWaddr 08:00:27:bb:a6:03

Here, eth0 means the first Ethernet interface available on the server. After getting the name of the interface to configure, we change the network settings for eth0 in the interfaces file at /etc/network/interfaces. By default, eth0 is configured to query the DHCP server for an IP assignment. The auto eth0 line is used to automatically configure the eth0 interface at server startup. Without this line, you will need to enable the network interface after each reboot. You can enable the eth0 interface with the following command:

$ sudo ifup eth0

Similarly, to disable a network interface, use the following command:

$ sudo ifdown eth0

The second line, iface eth0 inet static, sets the network configuration to static assignment. After this line, we add the network settings, such as the IP address, netmask, default gateway, and DNS servers.

After saving the changes, we need to restart the networking service for the changes to take effect. Alternatively, you can simply disable the network interface and enable it with ifdown and ifup commands.
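A static configuration normally only works if the default gateway sits on the same subnet as the address you assign. The following is a minimal bash sketch of that check, using the sample values from this recipe; the function names ip_to_int and same_subnet are illustrative helpers, not part of any Ubuntu tool.

```shell
#!/usr/bin/env bash
# Sketch: check that a gateway lies in the same subnet as a static address.
# ip_to_int and same_subnet are hypothetical helper names for illustration.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) if the address and the gateway share the same
# network part under the given netmask.
# Usage: same_subnet ADDRESS NETMASK GATEWAY
same_subnet() {
    local addr mask gw
    addr=$(ip_to_int "$1")
    mask=$(ip_to_int "$2")
    gw=$(ip_to_int "$3")
    [ $(( addr & mask )) -eq $(( gw & mask )) ]
}

# Sample values from the interfaces file shown in this recipe.
if same_subnet 192.168.1.100 255.255.255.0 192.168.1.1; then
    echo "gateway is on the local subnet"
else
    echo "gateway is NOT on the local subnet"
fi
# prints "gateway is on the local subnet"
```

Running a check like this before restarting the networking service can catch a mistyped gateway, which would otherwise leave a remote server unreachable.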

There's more…

The steps in this recipe are used to configure the network changes permanently. If you need to change your network parameters temporarily, you can use the ifconfig and route commands as follows:

- Change the IP address and netmask, as follows:

$ sudo ifconfig eth0 192.168.1.100 netmask 255.255.255.0

- Set the default gateway:

$ sudo route add default gw 192.168.1.1 eth0

- Edit /etc/resolv.conf to add temporary name servers (DNS):

nameserver 192.168.1.45
nameserver 192.168.1.46

- To verify the changes, use the following commands:

$ ifconfig eth0
$ route -n

- When you no longer need this configuration, you can easily reset it with the following command:

$ sudo ip addr flush eth0

- Alternatively, you can reboot your server to reset the temporary configuration.
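The ifconfig and route commands belong to the older net-tools package, which may not be installed by default on newer Ubuntu releases. The same temporary changes can be made with the ip command from iproute2, which expects a prefix length rather than a dotted netmask. The following sketch converts the netmask and prints the equivalent ip commands instead of running them; mask_to_prefix and temp_ip_commands are hypothetical helper names, and the values are the samples used above.

```shell
#!/usr/bin/env bash
# Sketch: print iproute2 equivalents of the temporary ifconfig/route commands.
# mask_to_prefix and temp_ip_commands are illustrative names only.

# Convert a dotted netmask (for example, 255.255.255.0) to a prefix length.
# Note: this does not verify that the mask bits are contiguous.
mask_to_prefix() {
    local mask=$1 prefix=0 octet
    local IFS=.
    for octet in $mask; do
        case $octet in
            255) prefix=$((prefix + 8)) ;;
            254) prefix=$((prefix + 7)) ;;
            252) prefix=$((prefix + 6)) ;;
            248) prefix=$((prefix + 5)) ;;
            240) prefix=$((prefix + 4)) ;;
            224) prefix=$((prefix + 3)) ;;
            192) prefix=$((prefix + 2)) ;;
            128) prefix=$((prefix + 1)) ;;
            0) ;;
            *) echo "invalid netmask octet: $octet" >&2; return 1 ;;
        esac
    done
    echo "$prefix"
}

# Print (do not execute) the ip commands for a temporary configuration.
# Usage: temp_ip_commands INTERFACE ADDRESS NETMASK GATEWAY
temp_ip_commands() {
    local iface=$1 addr=$2 mask=$3 gw=$4 prefix
    prefix=$(mask_to_prefix "$mask") || return 1
    echo "sudo ip addr add $addr/$prefix dev $iface"
    echo "sudo ip route add default via $gw dev $iface"
}

temp_ip_commands eth0 192.168.1.100 255.255.255.0 192.168.1.1
# prints:
#   sudo ip addr add 192.168.1.100/24 dev eth0
#   sudo ip route add default via 192.168.1.1 dev eth0
```

Printing the commands first lets you review them before applying anything to a live interface. To verify the result afterwards, ip addr show eth0 and ip route show play the roles of ifconfig eth0 and route -n.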

IPv6 configuration

You may need to configure your Ubuntu server with an IPv6 address. IPv6 addresses use a 128-bit address space and are written in hexadecimal. They differ from IPv4 addresses, which use a 32-bit address space. Ubuntu supports IPv6 addressing and can be easily configured with either DHCP or a static address. The following is an example of static configuration for IPv6:

iface eth0 inet6 static
    address 2001:db8::xxxx:yyyy
    gateway your_ipv6_gateway

See also

You can find more details about network configuration in the Ubuntu server guide:

- https://help.ubuntu.com/lts/serverguide/network-configuration.html

- Check out the Ubuntu wiki page on IP version 6: https://wiki.ubuntu.com/IPv6

Installing the DHCP server

DHCP is a service used to automatically assign network configuration to client systems. It is a handy tool when you have a large pool of systems that need to be configured with network settings. When you need to change the network configuration, say to update a DNS server, all you need to do is update the DHCP server, and all the connected hosts will be reconfigured with the new settings. You also get reliable IP address configuration that minimizes configuration errors and address conflicts, and you can easily add a new host to the network without spending time on network planning.

DHCP is most commonly used to provide IP configuration settings, such as IP address, net mask, default gateway, and DNS servers. However, it can also be set to configure the time server and hostname on the client.

DHCP can be configured to use the following configuration methods:

- Manual allocation: Here, the configuration settings are tied to the MAC address of the client's network card. The same settings are supplied each time the client makes a request with the same network card.

- Dynamic allocation: This method specifies a range of IP addresses to be assigned to the clients. The server dynamically assigns IP configuration to clients on a first-come, first-served basis. These settings are allocated for a specified time period called a lease; after this period, the client needs to renegotiate with the server to keep using the same address. If the client leaves the network for longer than the lease period, the lease expires and the address returns to the pool, where it can be assigned to other clients. The lease time is a configurable option and can be set to infinite.

Ubuntu comes pre-installed with the DHCP client, dhclient. The DHCP server daemon, dhcpd, can be installed while setting up an Ubuntu server or separately with the apt-get command.

Getting ready

Make sure that your DHCP host is configured with a static IP address.

You will need access to the root account or an account with sudo privileges.

How to do it…

Follow these steps to install a DHCP server:

- Install a DHCP server:

$ sudo apt-get install isc-dhcp-server

- Open the DHCP configuration file:

$ sudo nano -w /etc/dhcp/dhcpd.conf

- Change the default and max lease time if necessary:

default-lease-time 600;
max-lease-time 7200;

- Add the following lines at the end of the file (replace the IP addresses to match your network):

subnet 192.168.1.0 netmask 255.255.255.0 {
    range 192.168.1.150 192.168.1.200;
    option routers 192.168.1.1;
    option domain-name-servers 192.168.1.2, 192.168.1.3;
    option domain-name "example.com";
}

- Save the configuration file and exit with Ctrl + O and Ctrl + X.

- After changing the configuration file, restart dhcpd:

$ sudo service isc-dhcp-server restart

How it works…

Here, we have installed the DHCP server with the isc-dhcp-server package. It is open source software that implements the DHCP protocol. ISC-DHCP supports both IPv4 and IPv6.

After the installation, we need to set the basic configuration to match our network settings. All dhcpd settings are listed in the /etc/dhcp/dhcpd.conf configuration file. In the sample settings listed earlier, we have configured a new subnet, 192.168.1.0 with netmask 255.255.255.0. Addresses from 192.168.1.150 to 192.168.1.200 will be assigned to clients. The default lease time is set to 600 seconds, with an upper bound of 7200 seconds: a client can ask for a specific lease time, but only up to the maximum lease period of 7200 seconds. Additionally, the DHCP server will provide a default gateway (routers) as well as default DNS servers.
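The interaction between the two lease settings can be modeled with a short bash sketch. It is a simplified illustration of the rule described above, not the dhcpd implementation (the real server has further options, such as min-lease-time); granted_lease is a hypothetical function name.

```shell
#!/usr/bin/env bash
# Sketch: simplified model of how default-lease-time and max-lease-time
# decide the lease a client receives. Not the actual dhcpd logic.

# Usage: granted_lease REQUESTED DEFAULT MAX
# REQUESTED may be empty when the client does not ask for a specific time.
granted_lease() {
    local requested=$1 default=$2 max=$3
    if [ -z "$requested" ]; then
        echo "$default"        # no request: use default-lease-time
    elif [ "$requested" -gt "$max" ]; then
        echo "$max"            # request too long: cap at max-lease-time
    else
        echo "$requested"      # request within bounds: honor it
    fi
}

granted_lease ""    600 7200   # prints 600
granted_lease 3600  600 7200   # prints 3600
granted_lease 86400 600 7200   # prints 7200
```

Short leases such as the 600-second default suit networks where clients come and go often; longer leases reduce renewal traffic on stable networks.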

If you have multiple network interfaces, you may need to change the interface that dhcpd should listen to. These settings are listed in /etc/default/isc-dhcp-server. You can set multiple interfaces to listen to; just specify the interface names, separated by a space, for example, INTERFACES="wlan0 eth0".

There's more…

You can reserve an IP address for a specific device on the network. A reservation ensures that the specified device is always assigned the same IP address. To create a reservation, add the following lines to dhcpd.conf. They assign the IP address 192.168.1.201 to the client with the MAC address 08:D2:1F:50:F0:6F:

host Server1 {
    hardware ethernet 08:D2:1F:50:F0:6F;
    fixed-address 192.168.1.201;
}
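Note that the reserved address, 192.168.1.201, lies just outside the dynamic range of 192.168.1.150 to 192.168.1.200 configured earlier. Keeping reservations out of the dynamic range is commonly recommended so that the server never hands the reserved address to another client. The following bash sketch checks this for the sample values; ip_to_int, in_range, and pool_size are illustrative helper names.

```shell
#!/usr/bin/env bash
# Sketch: check that a reserved address does not fall inside the dynamic
# range, and count the addresses in that range. Helper names are hypothetical.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if ADDRESS lies between RANGE_START and RANGE_END, inclusive.
# Usage: in_range ADDRESS RANGE_START RANGE_END
in_range() {
    local ip lo hi
    ip=$(ip_to_int "$1")
    lo=$(ip_to_int "$2")
    hi=$(ip_to_int "$3")
    [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

# Number of addresses in an inclusive range.
# Usage: pool_size RANGE_START RANGE_END
pool_size() {
    echo $(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))
}

pool_size 192.168.1.150 192.168.1.200   # prints 51

if in_range 192.168.1.201 192.168.1.150 192.168.1.200; then
    echo "reserved address overlaps the dynamic range"
else
    echo "reserved address is outside the dynamic range"
fi
# prints "reserved address is outside the dynamic range"
```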

Installing the DNS server

DNS (the Domain Name System), whose servers are also known as name servers, is an Internet service that maps domain names to IP addresses and vice versa. A DNS server maintains a database of names and related IP addresses. When an application queries with a domain name, DNS responds with the mapped IP address. Applications can also ask for a domain name by providing an IP address.

DNS is quite a big topic, and an entire chapter could be written on DNS setup alone. This recipe assumes a basic understanding of how the DNS protocol works. We will cover the installation of BIND, a DNS server application; the configuration of BIND as a caching DNS server; and the setup of the Primary Master and Secondary Master. We will also cover some best practices to secure your DNS server.

Getting ready

In this recipe, I will be using four servers. You can create virtual machines if you want to simply test the setup:

- ns1: Name server one/Primary Master

- ns2: Name server two/Secondary Master

- host1: Host system one

- host2: Host system two, optional

- All servers should be configured in a private network. I have used the 10.0.2.0/24 network

- We need root privileges on all servers

How to do it…

Install BIND and set up a caching name server through the following steps:

- On ns1, install BIND and dnsutils with the following commands:

$ sudo apt-get update
$ sudo apt-get install bind9 dnsutils

- Open /etc/bind/named.conf.options, enable the forwarders section, and add your preferred DNS servers:

forwarders {
    8.8.8.8;
    8.8.4.4;
};

- Now restart BIND to apply the new configuration:

$ sudo service bind9 restart

- Check whether the BIND server is up and running:

$ dig -x 127.0.0.1

- You should get an output similar to the following:

;; Query time: 1 msec
;; SERVER: 10.0.2.53#53(10.0.2.53)

- Use dig to query an external domain and check the query time.

- Dig the same domain again and cross-check the query time. It should be lower than for the first query.
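To compare the two query times without reading the full dig output, the relevant line can be filtered out. This is a minimal sketch, assuming a hypothetical helper named query_time that reads dig output on standard input; the sample line is the one shown in the steps above:

```shell
# query_time: read dig output on stdin and print the reported
# query time in milliseconds (the number on the ";; Query time:" line).
query_time() {
    awk '/^;; Query time:/ { print $4 }'
}

# Demonstration with a captured line; against a live server you would
# pipe dig into the helper instead, for example:
#   dig ubuntu.com | query_time
printf ';; Query time: 1 msec\n' | query_time
```

Running the dig pipeline twice for the same domain should print a smaller number the second time if the answer was served from the cache.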

Set up Primary Master through the following steps:

- On the ns1 server, edit /etc/bind/named.conf.options and add the acl block above the options block:

acl "local" {
    10.0.2.0/24;  # local network
};

- Add the following lines inside the options block:

recursion yes;
allow-recursion { local; };
listen-on { 10.0.2.53; };  # ns1 IP address
allow-transfer { none; };

- Open the /etc/bind/named.conf.local file to add forward and reverse zones:

$ sudo nano /etc/bind/named.conf.local

- Add the forward zone:

zone "example.com" {
    type master;
    file "/etc/bind/zones/db.example.com";
};

- Add the reverse zone:

zone "2.0.10.in-addr.arpa" {
    type master;
    file "/etc/bind/zones/db.10";
};

- Create the zones directory under /etc/bind/:

$ sudo mkdir /etc/bind/zones

- Create the forward zone file using the existing zone file, db.local, as a template:

$ cd /etc/bind/
$ sudo cp db.local zones/db.example.com

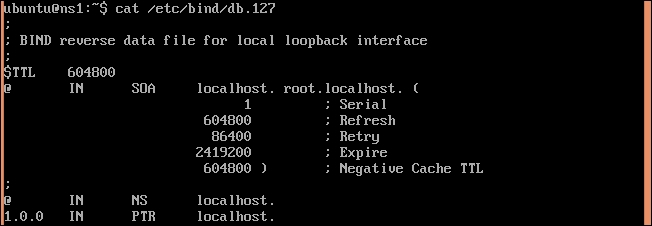

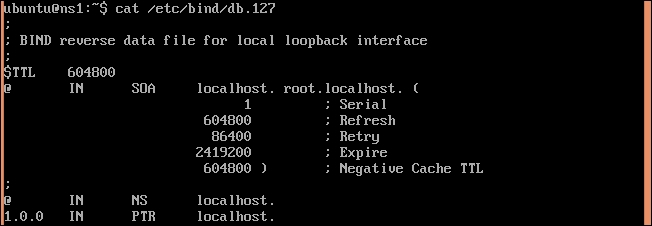

- The default file should look similar to the following image:

- Edit the

SOAentry and replacelocalhostwith FQDN of your server. - Increment the serial number (you can use the current date time as the serial number,

201507071100) - Remove entries for

localhost,127.0.0.1and::1. - Add new records:

; name server - NS records @ IN NS ns.exmple.com ; name server A records ns IN A 10.0.2.53 ; local - A records host1 IN A 10.0.2.58

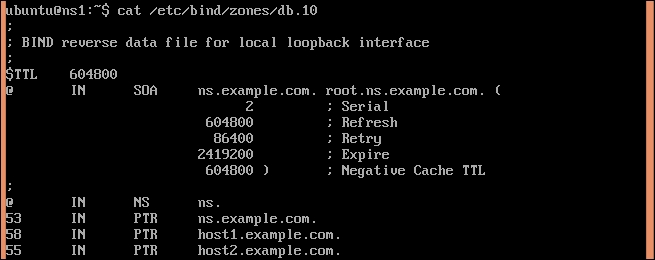

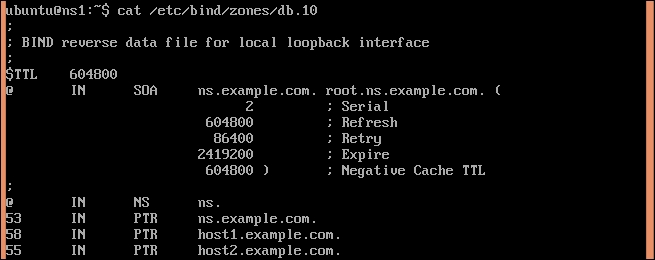

- Save the changes and exit the nano editor. The final file should look similar to the following image:

- Now create the reverse

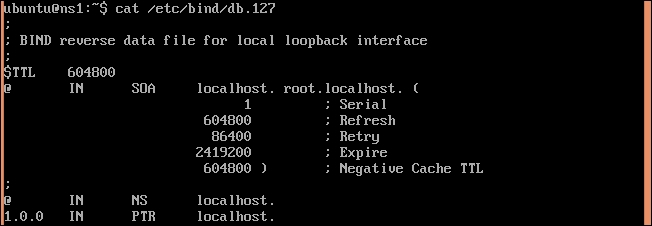

zonefile using/etc/bind/db.127as a template:$ sudo cp db.127 zones/db.10 - The default file should look similar to the following screenshot:

- Change the

SOArecord and increment the serial number. - Remove

NSandPTRrecords forlocalhost. - Add

NS,PTR, andhost records:; NS records @ IN NS ns.example.com ; PTR records 53 IN PTR ns.example.com ; host records 58 IN PTR host1.example.com

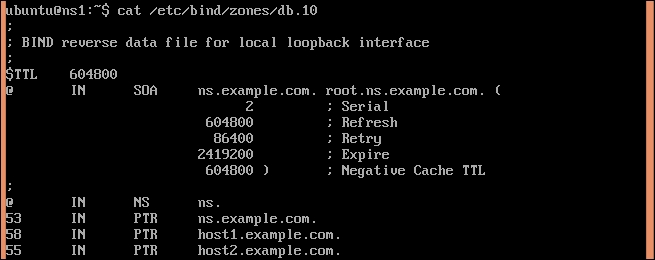

- Save the changes. The final file should look similar to the following image:

- Check the configuration files for syntax errors. The command should end with no output:

$ sudo named-checkconf

- Check the zone files for syntax errors:

$ sudo named-checkzone example.com /etc/bind/zones/db.example.com

- If there are no errors, you should see an output similar to the following:

zone example.com/IN: loaded serial 3
OK

- Check the reverse zone file, zones/db.10, passing the reverse zone name:

$ sudo named-checkzone 2.0.10.in-addr.arpa /etc/bind/zones/db.10

- If there are no errors, you should see output similar to the following:

zone 2.0.10.in-addr.arpa/IN: loaded serial 3
OK

- Now restart the BIND DNS server:

$ sudo service bind9 restart

- Log in to host2 and configure it to use ns.example.com as a DNS server. Add the IP address of the name server as a nameserver entry (nameserver 10.0.2.53) to /etc/resolv.conf on host2.

- Test forward lookup with the nslookup command:

$ nslookup host1.example.com

- You should see an output similar to the following:

$ nslookup host1.example.com
Server: 10.0.2.53
Address: 10.0.2.53#53
Name: host1.example.com
Address: 10.0.2.58

- Now test the reverse lookup:

$ nslookup 10.0.2.58

- It should output something similar to the following:

$ nslookup 10.0.2.58
Server: 10.0.2.53
Address: 10.0.2.53#53
58.2.0.10.in-addr.arpa name = host1.example.com
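The zone file steps above ask for a new serial number on every change. Serials are 32-bit unsigned values, and a date-based YYYYMMDDnn form is a widely used convention. The following is a minimal sketch, assuming a hypothetical helper named zone_serial that builds such a serial from today's date (UTC) and a revision counter you pass in:

```shell
# zone_serial [REVISION]
# Hypothetical helper: prints a YYYYMMDDnn serial for today (UTC).
# REVISION is a plain integer (1, 2, ...) and defaults to 1.
zone_serial() {
    rev=${1:-1}
    printf '%s%02d\n' "$(date -u +%Y%m%d)" "$rev"
}

zone_serial      # first change of the day
zone_serial 2    # second change on the same day
```

A ten-digit serial in this form stays well below the 32-bit limit of 4294967295, and each new day or revision yields a larger number than the one before.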

Set up Secondary Master through the following steps:

- First, allow zone transfer on Primary Master by setting the allow-transfer option in /etc/bind/named.conf.local:

zone "example.com" {
    type master;
    file "/etc/bind/zones/db.example.com";
    allow-transfer { 10.0.2.54; };
};
zone "2.0.10.in-addr.arpa" {
    type master;
    file "/etc/bind/zones/db.10";
    allow-transfer { 10.0.2.54; };
};

Note

A syntax check will throw errors if you miss semicolons.

- Restart BIND9 on Primary Master:

$ sudo service bind9 restart

- On Secondary Master (ns2), install the BIND package.

- Edit /etc/bind/named.conf.local to add zone declarations as follows:

zone "example.com" {
    type slave;
    file "db.example.com";
    masters { 10.0.2.53; };
};
zone "2.0.10.in-addr.arpa" {
    type slave;
    file "db.10";
    masters { 10.0.2.53; };
};

- Save the changes made to named.conf.local.

- Restart the BIND server on Secondary Master:

$ sudo service bind9 restart

- This will initiate the transfer of all zones configured on Primary Master. You can check the logs on Secondary Master at /var/log/syslog to verify the zone transfer.

Tip

A zone is transferred only if the serial number under the SOA section on Primary Master is greater than that of Secondary Master. Make sure that you increment the serial number after every change to the zone file.
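One way to check whether the secondary has caught up is to compare the SOA serial reported by each server. The sketch below assumes two hypothetical helpers, soa_serial and needs_transfer; the sample SOA answers are made up for illustration and use the field order that dig +short SOA prints (primary name server, contact, serial, refresh, retry, expire, minimum). The plain numeric comparison is a simplification that is adequate for ordinary incrementing serials:

```shell
# soa_serial: read one "dig +short SOA" answer on stdin and
# print the serial, which is the third field.
soa_serial() {
    awk 'NR == 1 { print $3 }'
}

# needs_transfer PRIMARY_SERIAL SECONDARY_SERIAL
# Succeeds when the primary's serial is greater than the secondary's.
needs_transfer() {
    [ "$1" -gt "$2" ]
}

# Illustrative answers; against live servers you would run, for example:
#   dig +short SOA example.com @10.0.2.53 | soa_serial
primary=$(printf 'ns.example.com. root.example.com. 4 604800 86400 2419200 604800\n' | soa_serial)
secondary=$(printf 'ns.example.com. root.example.com. 3 604800 86400 2419200 604800\n' | soa_serial)

if needs_transfer "$primary" "$secondary"; then
    echo "secondary is behind: $secondary < $primary"
else
    echo "secondary is up to date"
fi
```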

How it works…

In the first section, we have installed the BIND server and enabled a simple caching DNS server. A caching server helps to reduce bandwidth and latency in name resolution. The server will try to resolve queries locally from the cache. If the entry is not available in the cache, the query will be forwarded to external DNS servers and the result will be cached.

In the second and third sections, we have set Primary Master and Secondary Master respectively. Primary Master is the first DNS server. Secondary Master will be used as an alternate server in case the Primary server becomes unavailable.

Under Primary Master, we have declared a forward zone and reverse zone for the example.com domain. The forward zone is declared with domain name as the identifier and contains the type and filename for the database file. On Primary Master, we have set type to master. The reverse zone is declared with similar attributes and uses part of an IP address as an identifier. As we are using a 24-bit network address (10.0.2.0/24), we have included the first three octets of the IP address in reverse order (2.0.10) for the reverse zone name.
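The reverse naming rule can be sketched in a few lines of shell. The helpers below, reverse_zone_24 and ptr_name, are hypothetical names used only for illustration; the first one assumes a /24 network, as in this recipe:

```shell
# reverse_zone_24 NETWORK
# Reverse zone name for a /24 network: first three octets, reversed.
reverse_zone_24() {
    echo "$1" | awk -F. '{ print $3 "." $2 "." $1 ".in-addr.arpa" }'
}

# ptr_name IP
# Full in-addr.arpa name that a reverse lookup for IP asks about.
ptr_name() {
    echo "$1" | awk -F. '{ print $4 "." $3 "." $2 "." $1 ".in-addr.arpa" }'
}

reverse_zone_24 10.0.2.0   # prints 2.0.10.in-addr.arpa
ptr_name 10.0.2.58         # prints 58.2.0.10.in-addr.arpa
```

The last octet (58) is the part that appears as the record name inside the reverse zone file, while the remaining octets form the zone name.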

Lastly, we have created zone files by using existing files as templates. Zone files are the actual database that contains the records mapping FQDNs to IP addresses and vice versa. They contain an SOA record, NS records, and A or PTR records. The SOA record marks the start of authority for the zone; A records and AAAA records map a hostname to an IPv4 or IPv6 address respectively, and PTR records in the reverse zone map an IP address back to a hostname.

When the DNS server receives a query for the example.com domain, it checks for zone files for that domain. After finding the zone file, the host part from the query will be used to find the actual IP address to be returned as a result for query. Similarly, when a query with an IP address is received, the DNS server will look for a reverse zone file matching with the queried IP address.

See also

- Check out the DNS configuration guide in the Ubuntu server guide at https://help.ubuntu.com/lts/serverguide/dns-configuration.html

- For an introduction to DNS concepts, check out this tutorial by the DigitalOcean community at https://www.digitalocean.com/community/tutorials/an-introduction-to-dns-terminology-components-and-concepts

- Get manual pages for BIND9 at http://www.bind9.net/manuals

- Find manual pages for named with the following command:

$ man named

Hiding behind the proxy with squid

In this recipe, we will install and configure the squid proxy and caching server. The term proxy is generally combined with two different terms: one is forward proxy and the other is reverse proxy.

When we say proxy, it generally refers to forward proxy. A forward proxy acts as a gateway between a client's browser and the Internet, requesting the content on behalf of the client. This protects intranet clients by exposing the proxy as the only requester. A proxy can also be used as a filtering agent, imposing organizational policies. As all Internet requests go through the proxy server, the proxy can cache the response and return cached content when a similar request is found, thus saving bandwidth and time.

A reverse proxy is the exact opposite of a forward proxy. It protects internal servers from the outside world. A reverse proxy accepts requests from external clients and routes them to servers behind the proxy. External clients can see a single entity serving requests, but internally, it can be multiple servers working behind the proxy and sharing the load. More details about reverse proxies are covered in Chapter 3, Working with Web Servers.

In this recipe, we will discuss how to install a squid server. Squid is a well-known application in the forward proxy world and works well as a caching proxy. It supports HTTP, HTTPS, FTP, and other popular network protocols.

Getting ready

As always, you will need access to a root account or an account with sudo privileges.

How to do it…

The following steps set up and configure the Squid proxy:

- Squid is quite an old, mature, and commonly used piece of software. It is generally shipped as a default package with various Linux distributions. The Ubuntu package repository contains the necessary pre-compiled binaries, so the installation is as easy as two commands.

- First, update the apt cache and then install squid as follows:

$ sudo apt-get update
$ sudo apt-get install squid3

- Edit the /etc/squid3/squid.conf file:

$ sudo nano /etc/squid3/squid.conf

- Ensure that the cache_dir directive is not commented out:

cache_dir ufs /var/spool/squid3 100 16 256

- Optionally, change the http_port directive to your desired TCP port:

http_port 8080

- Optionally, change the squid hostname:

visible_hostname proxy1

- Save changes with Ctrl + O and exit with Ctrl + X.

- Restart the squid server:

$ sudo service squid3 restart

- Make sure that you have allowed the selected http_port on the firewall.

- Next, configure your browser to use the squid server as the HTTP/HTTPS proxy.

How it works…

Squid is available as a package in the Ubuntu repository, so you can directly install it with the apt-get install squid3 command. After installing squid, we need to edit the squid.conf file for some basic settings. The squid.conf file is quite big and lists a large number of directives along with their explanations. It is recommended to create a copy of the original configuration file as a reference before you make any modifications.

In our example, we are changing the port squid listens on. The default port is 3128. This is just a security precaution and it's fine if you want to run squid on the default port. Secondly, we have changed the hostname for squid.

Another important directive to look at is cache_dir. Make sure that this directive is enabled, and also set the cache size. The following example sets cache_dir to /var/spool/squid3 with the size set to 100 MB:

cache_dir ufs /var/spool/squid3 100 16 256

To check the cache utilization, use the following command:

$ sudo du /var/spool/squid3
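The positional fields of a ufs cache_dir line are easy to mix up: after the storage type and directory come the size in megabytes and the number of first-level and second-level subdirectories. The following minimal sketch, assuming a hypothetical helper named describe_cache_dir, labels the fields of the directive used in this recipe:

```shell
# describe_cache_dir: read squid.conf lines on stdin and label the
# fields of every active (uncommented) cache_dir directive.
describe_cache_dir() {
    awk '$1 == "cache_dir" {
        printf "type=%s dir=%s size_mb=%s l1_dirs=%s l2_dirs=%s\n", $2, $3, $4, $5, $6
    }'
}

echo 'cache_dir ufs /var/spool/squid3 100 16 256' | describe_cache_dir
```

To inspect a real configuration, the file can be passed in instead, for example with describe_cache_dir < /etc/squid3/squid.conf; commented-out directives are skipped because their first field is not cache_dir.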

There's more…

Squid provides a lot more features than a simple proxy server. The following is a quick list of some important features:

Access control list

With squid ACLs, you can set the list of IP addresses allowed to use squid. Add the following line at the bottom of the acl section of /etc/squid3/squid.conf:

acl developers src 192.168.2.0/24

Then, add the following line at the top of the http_access section in the same file:

http_access allow developers
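To see which clients a src ACL such as 192.168.2.0/24 covers, the subnet match can be reproduced with integer arithmetic. This is only an illustrative sketch, not how squid is implemented; ip_to_int and in_subnet are hypothetical helper names, and the code assumes a shell with 64-bit arithmetic (the default on current Ubuntu systems):

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_subnet IP NETWORK PREFIX
# Succeeds when IP lies inside NETWORK/PREFIX.
in_subnet() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

for client in 192.168.2.17 192.168.3.1; do
    if in_subnet "$client" 192.168.2.0 24; then
        echo "$client: matched by acl developers"
    else
        echo "$client: not matched"
    fi
done
```

Keep in mind that squid evaluates http_access rules in order and stops at the first match, which is why the allow rule is placed at the top of the section.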

Set cache refresh rules

You can change squid's caching behavior depending on the file types. Add the following line to cache all image files. The minimum and maximum values of refresh_pattern are given in minutes, so this line keeps images fresh for at least an hour (60) and at most a day (1440):

refresh_pattern -i \.(gif|png|jpg|jpeg|ico)$ 60 90% 1440

This line uses a case-insensitive regular expression (-i) to match file names that end with any of the listed file extensions (gif, png, and so on).
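Before adding a pattern to squid.conf, it can help to try the regular expression against a few sample URLs. The sketch below uses grep with extended, case-insensitive matching as a stand-in for squid's own matcher; is_cacheable_image is a hypothetical helper name and the URLs are made up for illustration:

```shell
# is_cacheable_image URL
# Succeeds if URL ends with one of the listed image extensions,
# ignoring case, in the same way as the refresh_pattern regex.
is_cacheable_image() {
    printf '%s\n' "$1" | grep -Eiq '\.(gif|png|jpg|jpeg|ico)$'
}

for url in http://example.com/logo.PNG http://example.com/index.html; do
    if is_cacheable_image "$url"; then
        echo "$url: matches"
    else
        echo "$url: no match"
    fi
done
```

Because the expression is anchored with $, a URL that carries a query string after the extension (for example logo.png?v=2) does not match this pattern.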

Sarg – tool to analyze squid logs

Squid Analysis Report Generator (Sarg) is an open source tool to monitor squid server usage. It parses the logs generated by Squid and converts them to easy-to-digest HTML-based reports. You can track various metrics such as bandwidth used per user, top sites, downloads, and so on. Sarg can be quickly installed with the following command:

$ sudo apt-get install sarg

The configuration file for Sarg is located at /etc/squid/sarg.conf. Once installed, set the output_dir path and run sarg. You can also set cron jobs to execute sarg periodically. The generated reports are stored in output_dir and can be accessed with the help of a web server.

Squid guard

Squid guard is another useful plugin for squid server. It is generally used to block a list of websites so that these sites are inaccessible from the internal network. As always, it can also be installed with a single command, as follows:

$ sudo apt-get install squidguard

The configuration file is located at /etc/squid/squidGuard.conf.

See also

- Check out the squid manual pages with the man squid command

- Check out the Ubuntu community page for squid guard at https://help.ubuntu.com/community/SquidGuard

Getting ready

As always, you will need access to a root account or an account with sudo privileges.

How to do it…

The following are the steps to set up and configure the Squid proxy:

- Squid is quite an old, mature, and commonly used piece of software. It is generally shipped as a default package with various Linux distributions. The Ubuntu package repository contains the necessary pre-compiled binaries, so the installation is as easy as two commands.

- First, update the apt cache and then install squid as follows:

$ sudo apt-get update
$ sudo apt-get install squid3

- Edit the /etc/squid3/squid.conf file:

$ sudo nano /etc/squid3/squid.conf

- Ensure that the cache_dir directive is not commented out:

cache_dir ufs /var/spool/squid3 100 16 256

- Optionally, change the http_port directive to your desired TCP port:

http_port 8080

- Optionally, change the squid hostname:

visible_hostname proxy1

- Save changes with Ctrl + O and exit with Ctrl + X.

- Restart the squid server:

$ sudo service squid3 restart

- Make sure that you have allowed the selected http_port on the firewall.

- Next, configure your browser to use the squid server as the http/https proxy.
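Besides a browser, command-line tools such as curl honor the http_proxy and https_proxy environment variables, which gives a quick way to test the proxy from a terminal. The following is a minimal sketch; use_proxy is a hypothetical helper, 192.168.2.10 is a placeholder for the address of your squid server, and 8080 is the http_port chosen in the steps above:

```shell
# use_proxy HOST PORT: point command-line tools in this shell at the proxy.
# HOST is a placeholder for your squid server; PORT should match http_port.
use_proxy() {
    http_proxy="http://$1:$2"
    https_proxy="http://$1:$2"
    export http_proxy https_proxy
}

use_proxy 192.168.2.10 8080
# Then request a page through the proxy, for example:
#   curl -I http://example.com
```

If the request fails, check that the port is open on the firewall and that the client address is permitted by the http_access rules.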

How it works…

Squid is available as a package in the Ubuntu repository, so you can directly install it with the apt-get install squid3 command. After installing squid, we need to edit the squid.conf file for some basic settings. The squid.conf file is large and lists a great many directives, each with an explanation. It is recommended to create a copy of the original configuration file as a reference before you make any modifications.
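Since most of squid.conf consists of comments, it can help to keep a pristine copy and view only the lines that are actually in effect. The following is a small sketch; active_directives is a hypothetical helper name and the .orig suffix for the backup is just one possible convention:

```shell
# active_directives FILE: print only the lines squid actually acts on,
# skipping blank lines and comment lines (including indented comments).
active_directives() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Example use on the server:
#   sudo cp /etc/squid3/squid.conf /etc/squid3/squid.conf.orig   # keep a reference copy
#   active_directives /etc/squid3/squid.conf                     # show the effective settings
```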

In our example, we changed the port that squid listens on. The default port is 3128. Changing it is just a security precaution, and it is fine to run squid on the default port. We also changed the hostname that squid reports.
