Using parameters and built-in functions

In this recipe, we shall demonstrate the power and versatility of ADF by performing a common task: importing data from several files (blobs) from a storage container into tables in Azure SQL Database. We shall create a pipeline, define datasets, and use a Copy activity to tie all the pieces together and transfer the data. We shall also see how easy it is to back up data with a quick modification to the pipeline.

Getting ready

In this recipe, we shall be using most of the services that were mentioned in the Technical requirements section of this chapter. Make sure that you have access to Azure SQL Database (with the AzureSQLDatabase instance we created) and the Azure storage account with the necessary .csv files already uploaded.

How to do it…

First, open your Azure Data Factory instance in the Azure portal and go to the Author and Monitor interface. Here, we shall define the datasets for input files and database tables, and the linked services (for Azure Blob Storage and Azure SQL Database):

- Start by creating linked services for the Azure storage account and

AzureSQLDatabase. - Create the linked service for the

adforchestrationstoragestorage account:(a) In the Manage tab, select Linked Services and click on the New button. On the New linked service blade, select Azure Blob Storage:

Figure 2.2 – The New linked service blade

(b) On the next screen, configure the linked service connection properties as shown in the following screenshot:

Figure 2.3 – Connection configurations for Azure Blob Storage

Name your linked service according to your naming convention (in our example, we named it

OrchestrationAzureBlobStorage1).Select the appropriate subscription and enter the name of your storage account (where you store the

.csvfiles).For Integration Runtime, select AutoResolveIntegrationRuntime.

For Authentication method, select Account Key.

Note:

In this recipe, we are using Account Key authentication to access our storage account. However, in your work environment, it is recommended to authenticate using Managed Identity, taking advantage of the Azure Active Directory service. This is more secure and allows you to avoid using credentials in your code. Find the references for more information about using Managed Identity with Azure Data Factory in the See also section of this recipe.

(c) Click the Test Connection button at the bottom and verify that you can connect to the storage account.

(d) Finally, click on the Create button and wait for the linked service to be created.

- Create the second linked service for

AzureSQLDatabase:

Figure 2.4 – Connection properties for Azure SQL Database

(a) In the Manage tab, create a new linked service, but this time select Azure SQL from choices in the New linked service blade. You can enter

Azure SQLinto the search field to find it easily.(b) Select the subscription information and the SQL server name (the dropdown will present you with choices). Once you have selected the SQL server name, you can select your database (

AzureSQLDatabase) from the dropdown in the Database Name section.(c) Select SQL Authentication for Authentication Type. Enter the username and password for your database.

(d) Make sure to test the connection. If the connection fails, ensure that you configured access correctly in Firewall and Network Settings. Once you have successfully tested the connection, click on Create to save your linked service.

Now, we shall create two datasets, one for each linked service.

- In the Author tab, define the dataset for Azure Storage as shown in the following screenshot:

Figure 2.5 – Create a new dataset

(a) Go to Datasets and click on New dataset. Select Azure Blob Storage from the choices and click Continue.

(b) In the Select Format blade, select Delimited Text and hit Continue.

(c) Call your new dataset

CsvDataand select OrchestrationAzureBlobStorage in the Linked Service dropdown.(d) With the help of the folder button, navigate to your Azure folder and select any file from there to specify the file path:

Figure 2.6 – Dataset properties

(e) Check the First Row as Header checkbox and click on Create.

- In the same Author tab, create a dataset for the Azure SQL table:

(a) Go to Datasets and click on New dataset.

(b) Select Azure SQL from the choices in the New Dataset blade.

(c) Name your dataset

AzureSQLTables.(d) In the Linked Service dropdown, select AzureSQLDatabase. For the table name, select Country from the dropdown.

(e) Click on Create.

- Parameterize the

AzureSQLTablesdataset:(a) In the Parameters tab, enter the name of your new parameter,

tableName:

Figure 2.7 – Parameterizing the dataset

(b) Next, in the Connection tab, click on the Edit checkbox and enter

dboas schema and@dataset().tableNamein the table text field, as shown in the following screenshot:

Figure 2.8 – Specifying a value for the dataset parameter

- In the same way, parameterize and add dynamic content in the Connection tab for the

CsvDatadataset:(a) Select your dataset, open the Parameters tab, and create a parameter named

filename.(b) In the Connections tab, in the File Path section, click inside the File text box, then click on the Add Dynamic Content link. This will bring up the Dynamic Content interface. In that interface, find the Parameters section and click on

filename. This will generate the correct code to refer to the dataset'sfilenameparameter in the dynamic content text box:

Figure 2.9 – Dynamic content interface

Click on the Finish button to finalize your choice.

Verify that you can see both datasets under the Datasets tab:

Figure 2.10 – Datasets in the Author tab

- We are now ready to design the pipeline.

In the Author tab, create a new pipeline. Change its name to

pl_orchestration_recipe_1. - From the Move and Transform menu in the Activities pane (on the left), drag a Copy activity onto the canvas:

Figure 2.11 – Pipeline canvas with a Copy activity

On the bottom of the canvas, you will see some tabs: General, Source, Sink, and so on. Configure your Copy activity.

In the General tab, you can configure the name for your activity. Call it

Copy From Blob to Azure SQL.In the Source tab, select the

CsvDatadataset and specifycountries.csvin the filename textbox.In the Sink tab, select the

AzureSQLTablesdataset and specifyCountryin the tableName text field. - We are ready to run the pipeline in Debug mode:

Note

You will learn more about using the debug capabilities of Azure Data Factory in Chapter 10, Monitoring and Troubleshooting Data Pipelines. In this recipe, we introduce you to the Output pane, which will help you understand the design and function of this pipeline.

(a) Click the Debug button in the top panel. This will run your pipeline.

(b) Put your cursor anywhere on the pipeline canvas. You will see the report with the status of the activities in the bottom panel in the Output tab:

Figure 2.12 – Debug output

Hover your cursor over the row representing the activity to see the inputs and outputs buttons. We shall make use of these in later chapters.

After your pipeline has run, you should see that the

dbo.Countrytable in your Azure SQL database has been populated with the countries data:

Figure 2.13 – Contents of the Country table in Azure SQL Database

We have copied the contents of the

Countries.csvfile into the database. In the next steps, we shall demonstrate how parameterizing the datasets gives us the flexibility to define which file we want to copy and which SQL table we want as the destination without redesigning the pipeline. - Edit the pipeline: click on the Copy from Blob To Azure SQL activity to select it, and specify

airlines.csvfor the filename in the Source tab andAirlinefor the table name in the Sink tab. Run your pipeline again (in Debug mode), and you should see that the second table is populated with the data – using the same pipeline! - Now, let's say we want to back up the contents of the tables in an Azure SQL database before overwriting them with data from

.csvfiles. We can easily enhance the existing pipeline to accomplish this. - Drag another instance of the Copy activity from the Activities pane, name it

Backup Copy Activity, and configure it in the following way:(a) For the source, select AzureSQLDatabase for the linked service, and add

Airlinein the text box for the table name.(b) In Sink, specify

CsvDataas the linked service, and enter the following formula into the filename textbox:@concat('Airlines-', utcnow(), '.backup' ).(c) Connect Backup Copy Activity to the Copy from Blob to AzureSQL copy activity:

Figure 2.14 – Adding backup functionality to the pipeline

- Run the pipeline in debug mode. After the run is complete, you should see the backup file in your storage account.

- We have created two linked services and two datasets, and we have a functioning pipeline. Click on the Publish All button at the top to save your work.

Let's look at how this works!

How it works…

In this recipe, we became familiar with all the major components of an Azure Data Factory pipeline: linked services, datasets, and activities:

- Linked services represent configured connections between your Data Factory instance and the service that you want to use.

- Datasets are more granular: they represent the specific view of the data that your activities will use as input and output.

- Activities represent the actions that are performed on the data. Many activities require you to specify where the data is extracted from and where it is loaded to. The ADF terms for these entities are source and sink.

Every pipeline that you design will have those components.

In step 1 and step 2, we created the linked services to connect to Azure Blob Storage and Azure SQL Database. Then, in step 3 and step 4, we created datasets that connected to those linked services and referred to specific files or tables. We created parameters that represented the data we referred to in step 5 and step 6, and this allowed us to change which files we wanted to load into tables without creating additional pipelines. In the remaining steps, we worked with instances of the Copy activity, specifying the inputs and outputs (sources and sinks) for the data.

There's more…

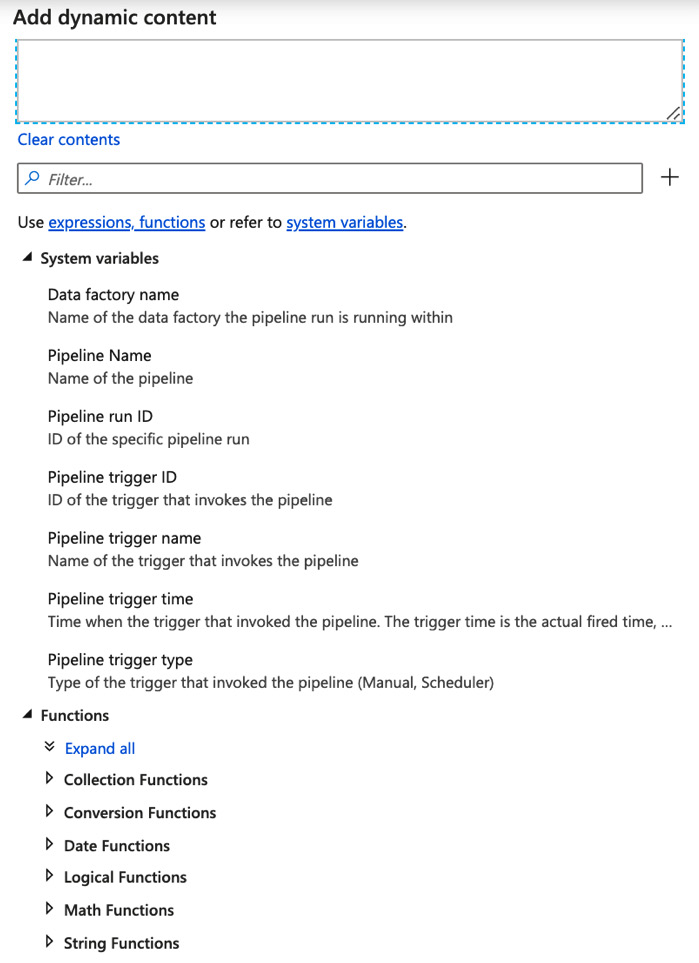

We used a built-in function for generating UTC timestamps in step 12. Data Factory provides many convenient built-in functions and expressions, as well as system variables, for your use. To see them, click on Backup SQL Data activity in your pipeline and go to the Source tab below it. Put your cursor inside the tableName text field. You will see an Add dynamic content link appear underneath. Click on it, and you will see the Add dynamic content blade:

Figure 2.15 – Data Factory functions and system variables

This blade lists many useful functions and system variables to explore. We will use some of them in later recipes.

See also

Microsoft keeps extensive documentation on Data Factory. For a more detailed explanation of the concepts used in this recipe, refer to the following pages:

- Linked services in Azure Data Factory:

https://docs.microsoft.com/azure/data-factory/concepts-linked-services

- Pipelines and activities in Azure Data Factory:

https://docs.microsoft.com/azure/data-factory/concepts-pipelines-activities

- Setting up and using Managed Identity with Azure Data Factory:

https://docs.microsoft.com/azure/data-factory/data-factory-service-identity