H2O.ai's answer to these challenges

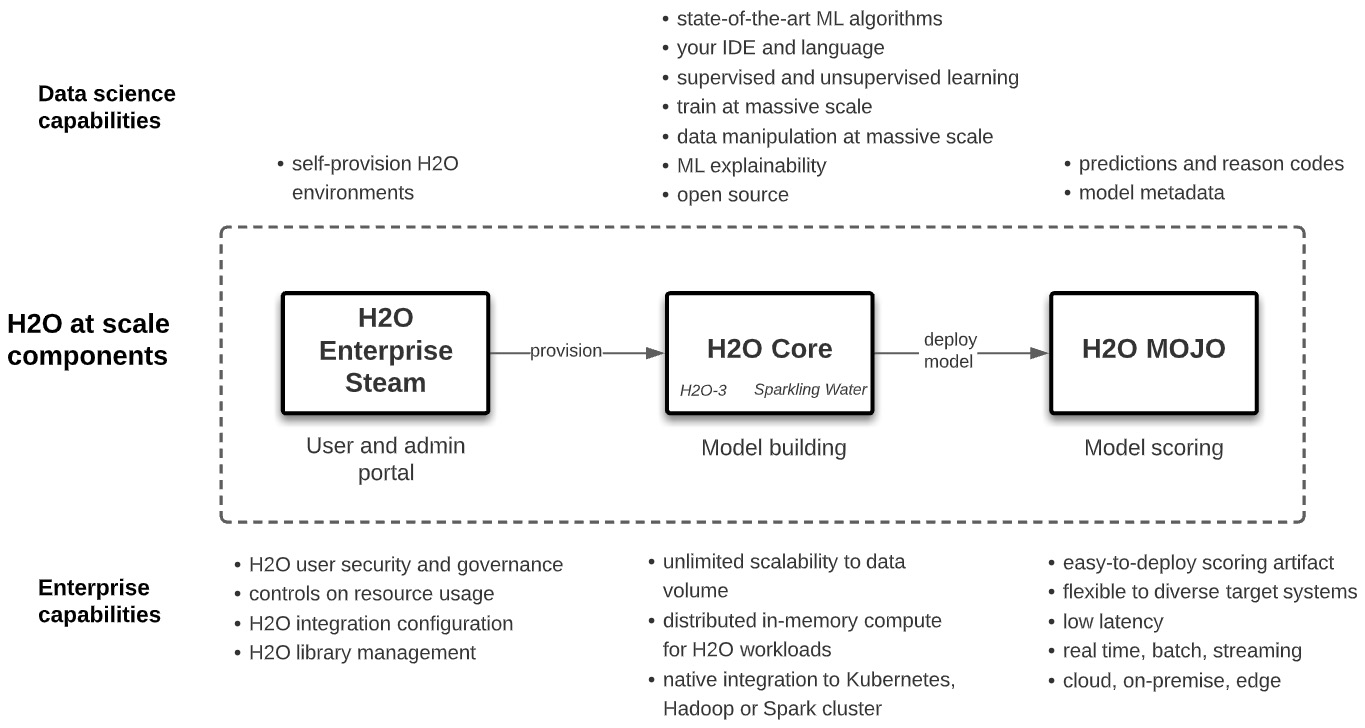

H2O.ai provides software to build ML models at scale and overcome the challenges of doing so – model building at scale, model deployment at scale, and dealing with enterprise stakeholders' concerns and inherent friction along the way. These components are described in brief in the following diagram:

Figure 1.6 – H2O ML at scale

Subsequent chapters of this book elaborate on how these components are used to build and deploy state-of-the-art models within the complexities of the enterprise environment.

Let's take a first look at each of these components:

- H2O Core: This is open source software that distributes state-of-the-art ML algorithms and data manipulations over a specified number of servers on Kubernetes, Hadoop, or Spark environments. Data is partitioned in memory across the designated servers, and the ML algorithms run in parallel over those partitions.

This architecture lets model building scale horizontally to hundreds of gigabytes or terabytes of data, and it generally gives fast processing times at lower data volumes. Data scientists work with familiar IDEs, languages, and algorithms and are abstracted away from the underlying architecture. Thus, for example, a data scientist can run an XGBoost model in Python from a Jupyter notebook against 500 GB of data in Hadoop, much as they would with data loaded on their laptop.

H2O Core is often referred to as H2O Open Source and comes in two forms, H2O-3 and Sparkling Water, which we will elaborate on in subsequent chapters. H2O Core can be run as a scaled-down sandbox on a single server or laptop.
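To make the partition-and-parallelize idea behind H2O Core more concrete, here is a minimal, standard-library-only sketch. It is not H2O code: the function names (`partition`, `partial_stats`, and `distributed_mean`) are hypothetical, and threads on one machine stand in for the servers of a cluster. Each worker summarizes only its own slice of the data, and the small per-slice summaries are then combined into a global result:

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n_workers):
    """Split rows into n_workers contiguous chunks of near-equal size."""
    size, extra = divmod(len(rows), n_workers)
    chunks, start = [], 0
    for i in range(n_workers):
        # The first `extra` chunks take one additional row each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(rows[start:end])
        start = end
    return chunks


def partial_stats(chunk):
    """Map step: a worker summarizes only the chunk it holds."""
    return sum(chunk), len(chunk)


def distributed_mean(rows, n_workers=4):
    """Reduce step: combine the per-chunk summaries into a global mean."""
    chunks = partition(rows, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_stats, chunks))
    total = sum(s for s, _ in partials)
    count = sum(n for _, n in partials)
    return total / count


if __name__ == "__main__":
    data = list(range(1, 1001))
    print(distributed_mean(data, n_workers=4))  # prints 500.5
```

The point of the sketch is that only the small summaries travel between workers, never the full dataset, which is why adding workers lets the same computation cover more data.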

- H2O Enterprise Steam: This is a web UI or API for data scientists to self-provision and manage their individual H2O Core environments. Self-provisioning includes auto-calculation of horizontal scaling based on user inputs that describe the data. Enterprise Steam is also used by administrators to manage users, including defining boundaries for their resource consumption, and to configure H2O Core integration against Hadoop, Spark, or Kubernetes.

- H2O MOJO: This is an easy-to-deploy scoring artifact exportable from models built with H2O Core. MOJOs are low-latency (typically under 100 ms) Java binaries that can run on any Java Virtual Machine (JVM) and thus serve predictions on diverse software systems, such as REST servers, database clients, Amazon SageMaker, Kafka queues, Spark pipelines, Hive user-defined functions (UDFs), and Internet of Things (IoT) devices.

- APIs: Each component has a rich set of APIs so that you can automate workflows, including continuous integration and continuous delivery (CI/CD) and retraining pipelines.
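To illustrate what the Enterprise Steam bullet means by auto-calculating horizontal scaling from user inputs that describe the data, consider the following hedged sketch. It is not Enterprise Steam's actual calculation: the function `estimate_cluster`, its parameters, and the default values (a memory multiplier of 4, 64 GB per node, and a 50-node administrator limit) are all assumptions chosen for illustration only:

```python
import math


def estimate_cluster(data_gb, node_memory_gb=64, memory_factor=4.0, max_nodes=50):
    """Suggest (node_count, memory_per_node_gb) for a dataset size.

    Hypothetical heuristic, not Enterprise Steam's algorithm:
    total cluster memory = data size * memory_factor, spread over
    nodes of node_memory_gb each, capped by an admin-defined max_nodes.
    """
    if data_gb <= 0 or node_memory_gb <= 0:
        raise ValueError("data_gb and node_memory_gb must be positive")
    required_gb = data_gb * memory_factor
    nodes = max(1, math.ceil(required_gb / node_memory_gb))
    if nodes > max_nodes:
        # Models the administrator's boundary on resource consumption.
        raise ValueError(f"{nodes} nodes requested; limit is {max_nodes}")
    return nodes, node_memory_gb


if __name__ == "__main__":
    print(estimate_cluster(500))  # prints (32, 64)
```

With these assumed defaults, a 500 GB dataset needs 2,000 GB of cluster memory, which rounds up to 32 nodes of 64 GB each. A request that would exceed the administrator's limit is rejected rather than silently reduced.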

The focus of this book is on building and deploying state-of-the-art models at scale using H2O Core with help from Enterprise Steam and deploying those models as MOJOs within the complexities of enterprise environments.
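The build-then-export flow described above can be sketched with the H2O-3 Python client. Treat this as a minimal sketch under stated assumptions rather than a reference implementation: it assumes the `h2o` package and a Java runtime are installed, that XGBoost is available on your platform, and that the target column is categorical. The function name `train_and_export_mojo` and the hyperparameter values are hypothetical:

```python
def train_and_export_mojo(data_path, target, mojo_dir):
    """Train an XGBoost model with H2O-3 and export it as a MOJO.

    Returns the path of the saved MOJO file.
    """
    # Imported inside the function so this module loads without H2O installed.
    import h2o
    from h2o.estimators import H2OXGBoostEstimator

    h2o.init()  # starts, or attaches to, a local H2O-3 instance

    frame = h2o.import_file(data_path)
    # Assumption: a classification problem, so the target becomes categorical.
    frame[target] = frame[target].asfactor()
    train, valid = frame.split_frame(ratios=[0.8], seed=42)

    features = [col for col in frame.columns if col != target]
    model = H2OXGBoostEstimator(ntrees=50, max_depth=6, seed=42)  # illustrative values
    model.train(x=features, y=target, training_frame=train, validation_frame=valid)

    # Export the scoring artifact for deployment outside the cluster.
    return model.download_mojo(path=mojo_dir)
```

The same calls apply whether H2O Core runs as a laptop sandbox or on a multi-node cluster. To attach to an already running remote cluster, you would typically use `h2o.connect` instead of `h2o.init`.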

H2O at Scale and H2O AI Cloud

In this book, H2O at scale refers to H2O Enterprise Steam, H2O Core, and H2O MOJO together, because these components address the ML at scale challenges described earlier in this chapter, especially through the distributed ML scalability that H2O Core provides for model building.

Note that H2O.ai offers a larger end-to-end ML platform called the H2O AI Cloud. The H2O AI Cloud integrates a hyper-advanced AutoML tool (called H2O Driverless AI) and other model building engines, an MLOps scoring, monitoring, and governance environment (called H2O MLOps), and a low-code software development kit, or SDK (called H2O Wave) with H2O API hooks to build AI applications that publish to the App Store. It also integrates H2O at scale as defined in this book.

H2O at scale can be deployed standalone or as part of the H2O AI Cloud. In a standalone implementation, Enterprise Steam is not strictly required, but for reasons elaborated on later in this book, it is considered essential for enterprise implementations.

The majority of this book is focused on H2O at scale. The last part of the book will extend our understanding to the H2O AI Cloud and how H2O at scale components can leverage this larger integrated platform and vice versa.